Lecture 08

Generalized Measurement Models: Modeling Observed Data II

Generalized Measurement Models: Modeling Observed Data II

Educational Statistics and Research Methods

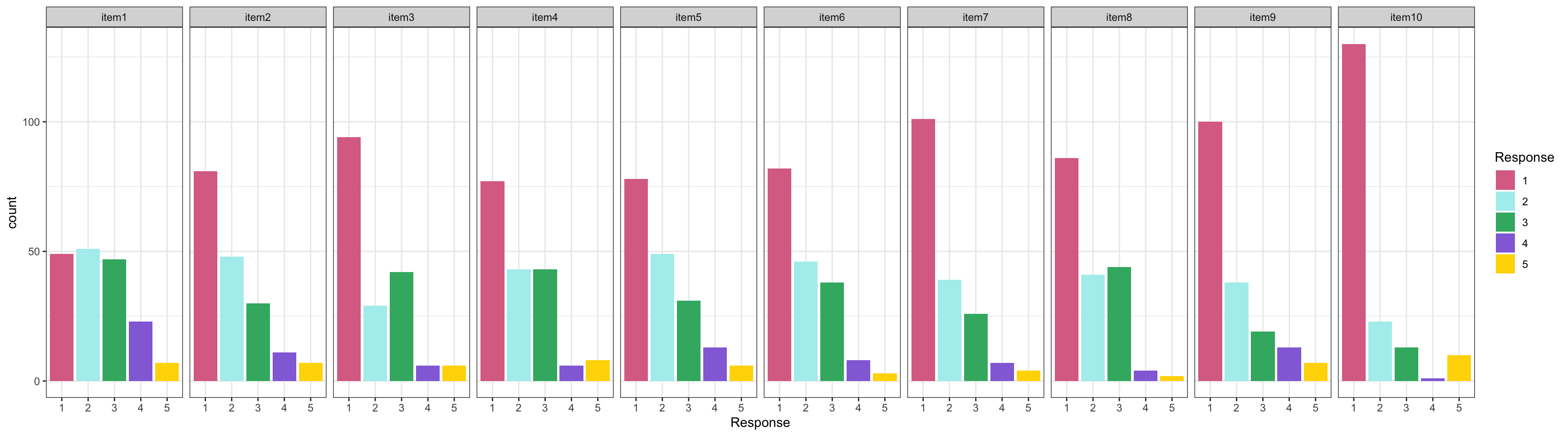

Conspiracy Theories── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.4 ✔ readr 2.1.5

✔ forcats 1.0.0 ✔ stringr 1.5.1

✔ ggplot2 3.5.1 ✔ tibble 3.2.1

✔ lubridate 1.9.4 ✔ tidyr 1.3.1

✔ purrr 1.0.4

── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

here() starts at /Users/jihong/Documents/Projects/website-jihong

Loading required package: Rcpp

This is blavaan 0.5-8

On multicore systems, we suggest use of future::plan("multicore") or

future::plan("multisession") for faster post-MCMC computations.

Before moving onto the latent variable, let’s note the posterior distribution of item parameters (for a single item):

f(μi,λi,ψi∣Y)∝f(Y∣μi,λi,ψi)f(μi,λi,ψi)

where f(Y∣μi,λi,ψi) is the (joint) posterior distribution of the parameters for item i conditional on the adta

f(Y∣μi,λi,ψi) is the distribution we defined for our observed data:

f(Y∣μi,λi,ψi)∼N(μi+λiθp,ψi)

f(μi,λi,ψi) is the (joint) prior distribution for each of the parameters, which, are independent:

f(μi,λi,ψi)=f(μi)f(λi)f(ψi)

The estimated latent variables are then:

# A tibble: 177 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 theta[1] 0.0221 0.0222 0.246 0.250 -0.386 0.419 1.00 5122. 5185.

2 theta[2] 1.53 1.53 0.257 0.254 1.11 1.96 1.00 3754. 4698.

3 theta[3] 1.71 1.71 0.264 0.263 1.27 2.14 1.00 3809. 4720.

4 theta[4] -0.927 -0.924 0.250 0.246 -1.34 -0.517 1.00 4936. 4752.

5 theta[5] 0.0443 0.0485 0.248 0.244 -0.368 0.449 1.00 5126. 4699.

6 theta[6] -0.976 -0.972 0.252 0.249 -1.40 -0.566 1.00 3786. 4650.

7 theta[7] -0.336 -0.338 0.254 0.249 -0.754 0.0846 1.00 4421. 4882.

8 theta[8] -0.0520 -0.0548 0.248 0.247 -0.460 0.349 1.00 5037. 5503.

9 theta[9] -0.785 -0.784 0.244 0.248 -1.19 -0.388 1.00 4633. 5479.

10 theta[10] 0.0477 0.0460 0.248 0.250 -0.357 0.455 1.00 5000. 4896.

# ℹ 167 more rowslavaanThe posterior distribution of the person parameters (the latent variable for a single person):

f(θp∣Y)∝f(Y∣θp)f(θp)

Here:

f(θp∣Y) is the posterior distribution of the latent variable conditional on the observed data;

f(Y∣θp) is the model (data) likelihood:

f(Y∣θp)=I∏i=1f(Yi∣θp)

f(θp)∼N(0,1) is the prior distribution for the latent variable – θp

parameters {} Blockparameters {

vector[nObs] theta; // the latent variables (one for each person)

vector[nItems] mu; // the item intercepts (one for each item)

vector[nItems] lambda; // the factor loadings/item discriminations (one for each item)

vector<lower=0>[nItems] psi; // the unique standard deviations (one for each item)

}Here, the parameterization of λ (factor loadings / discrimination parameters) can lead to problems in estimation

The issue is λiθp=(−λi)(−θp)

To demonstrate, we will start with different random number seend

Currently use 09102022: works fine

Change to 25102022: big problem

modelCFA_samplesFail = modelCFA_stan$sample( data = modelCFA_data, seed = 25102022, chains = 4, parallel_chains = 4, iter_warmup = 1000, iter_sampling = 2000 )modelCFA_samplesFail = modelCFA_stan$sample( data = modelCFA_data, seed = 25102022, chains = 4, parallel_chains = 4, iter_warmup = 1000, iter_sampling = 2000 )

Convergence fail with maximum of ˆR as:

[1] 1.73562# A tibble: 10 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 lambda[1] -0.001 0.01 0.743 1.09 -0.843 0.841 1.73 6.08 106.

2 lambda[2] -0.002 -0.004 0.873 1.29 -0.972 0.969 1.73 6.10 121.

3 lambda[3] -0.002 0.007 0.805 1.19 -0.901 0.899 1.73 6.08 113.

4 lambda[4] -0.001 -0.012 0.846 1.25 -0.945 0.941 1.73 6.09 113.

5 lambda[5] -0.001 -0.002 0.999 1.47 -1.09 1.09 1.73 6.13 122.

6 lambda[6] -0.001 0.008 0.9 1.33 -0.983 0.98 1.73 6.14 103.

7 lambda[7] -0.002 -0.002 0.767 1.13 -0.855 0.85 1.73 6.08 118.

8 lambda[8] -0.001 -0.002 0.855 1.26 -0.931 0.932 1.73 6.09 108.

9 lambda[9] -0.002 0.029 0.863 1.27 -0.963 0.962 1.73 6.12 101.

10 lambda[10] -0.001 -0.003 0.677 0.993 -0.775 0.774 1.73 6.08 105.Unfortunately, we are unable to extract initial values that were generated automatically by cmdstanr. See here for more details.

# A tibble: 177 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 theta[1] -0.002 0.001 0.247 0.245 -0.414 0.405 1.00 3167. 4819.

2 theta[2] -0.001 0.004 1.56 2.27 -1.87 1.87 1.73 6.08 104.

3 theta[3] 0 0.029 1.73 2.53 -2.06 2.05 1.73 6.07 105.

4 theta[4] -0.008 0.018 0.962 1.37 -1.26 1.24 1.73 6.07 130.

5 theta[5] 0.001 0 0.247 0.249 -0.403 0.410 1.02 177. 4690.

6 theta[6] -0.004 0.05 1.01 1.45 -1.30 1.30 1.73 6.07 106.

7 theta[7] -0.005 -0.002 0.422 0.503 -0.672 0.656 1.60 6.65 104.

8 theta[8] -0.003 -0.001 0.254 0.248 -0.427 0.417 1.03 112. 3799.

9 theta[9] -0.009 0.015 0.825 1.16 -1.11 1.09 1.73 6.06 113.

10 theta[10] -0.004 -0.004 0.252 0.251 -0.416 0.418 1.02 168. 3352.

11 theta[11] -0.006 -0.055 1.01 1.45 -1.30 1.29 1.73 6.07 99.4

12 theta[12] -0.004 -0.006 0.356 0.395 -0.571 0.578 1.46 7.62 105.

13 theta[13] -0.007 -0.025 0.787 1.11 -1.07 1.06 1.73 6.06 106.

14 theta[14] -0.006 -0.101 1.01 1.45 -1.32 1.29 1.73 6.06 99.0

15 theta[15] -0.005 0.037 0.875 1.24 -1.16 1.14 1.73 6.06 97.9

16 theta[16] -0.004 -0.005 0.259 0.259 -0.434 0.424 1.03 82.2 3490.

17 theta[17] -0.004 -0.006 0.263 0.263 -0.438 0.430 1.03 88.1 4232.

18 theta[18] -0.006 -0.017 0.710 0.985 -0.984 0.984 1.73 6.08 101.

19 theta[19] 0.001 -0.005 1.89 2.77 -2.22 2.23 1.73 6.07 116.

20 theta[20] -0.007 0.072 0.962 1.37 -1.26 1.25 1.74 6.05 110.

21 theta[21] -0.008 0 0.350 0.390 -0.571 0.548 1.45 7.81 104.

22 theta[22] -0.005 -0.051 1.01 1.45 -1.30 1.30 1.73 6.08 109.

23 theta[23] -0.003 0.032 1.01 1.45 -1.30 1.29 1.73 6.06 114.

24 theta[24] -0.008 -0.027 1.01 1.43 -1.30 1.30 1.73 6.06 108.

25 theta[25] -0.005 -0.008 0.602 0.807 -0.883 0.865 1.73 6.07 106.

26 theta[26] -0.003 -0.009 0.779 1.09 -1.07 1.06 1.73 6.07 107.

27 theta[27] -0.002 -0.007 0.276 0.275 -0.452 0.451 1.10 24.5 375.

28 theta[28] -0.002 0.091 1.24 1.80 -1.55 1.55 1.73 6.07 99.7

29 theta[29] -0.004 -0.032 0.928 1.32 -1.23 1.21 1.73 6.06 107.

30 theta[30] -0.001 -0.057 1.70 2.49 -2.03 2.04 1.73 6.07 112.

31 theta[31] -0.003 -0.004 0.602 0.803 -0.877 0.871 1.73 6.09 111.

32 theta[32] -0.003 -0.005 0.487 0.619 -0.743 0.736 1.69 6.24 106.

33 theta[33] -0.003 -0.003 0.412 0.486 -0.654 0.649 1.58 6.79 107.

34 theta[34] -0.007 -0.068 1.01 1.45 -1.30 1.28 1.73 6.07 107.

35 theta[35] -0.007 -0.034 0.710 0.981 -0.991 0.977 1.73 6.08 107.

36 theta[36] -0.006 0.035 0.834 1.17 -1.13 1.10 1.73 6.07 103.

37 theta[37] -0.005 0.031 1.01 1.45 -1.29 1.30 1.73 6.07 109.

38 theta[38] -0.005 -0.002 0.324 0.348 -0.533 0.517 1.36 8.87 128.

39 theta[39] -0.007 -0.072 1.01 1.44 -1.32 1.27 1.73 6.06 102.

40 theta[40] -0.002 -0.003 0.249 0.250 -0.408 0.409 1.02 169. 3659.

41 theta[41] -0.008 0.044 1.01 1.45 -1.30 1.28 1.73 6.08 115.

42 theta[42] -0.005 -0.007 0.265 0.262 -0.437 0.427 1.06 39.6 1019.

43 theta[43] -0.006 -0.014 0.686 0.951 -0.962 0.950 1.73 6.08 108.

44 theta[44] -0.004 -0.003 0.258 0.254 -0.425 0.420 1.04 72.9 3059.

45 theta[45] -0.003 0.001 0.455 0.566 -0.700 0.692 1.67 6.33 110.

46 theta[46] -0.003 -0.007 0.361 0.406 -0.574 0.580 1.49 7.39 107.

47 theta[47] -0.005 -0.027 0.564 0.749 -0.821 0.827 1.73 6.09 107.

48 theta[48] -0.005 -0.021 0.869 1.23 -1.17 1.15 1.73 6.08 111.

49 theta[49] -0.009 -0.033 1.01 1.44 -1.29 1.30 1.73 6.06 101.

50 theta[50] -0.006 -0.008 0.304 0.308 -0.505 0.497 1.21 12.8 142.

51 theta[51] -0.005 0.058 1.01 1.44 -1.31 1.29 1.73 6.07 124.

52 theta[52] -0.006 -0.003 0.408 0.483 -0.654 0.640 1.58 6.75 115.

53 theta[53] -0.008 -0.031 1.01 1.46 -1.31 1.29 1.73 6.06 109.

54 theta[54] -0.001 0.02 1.24 1.80 -1.54 1.53 1.73 6.07 109.

55 theta[55] -0.006 -0.001 1.01 1.45 -1.31 1.30 1.73 6.07 109.

56 theta[56] -0.005 0.06 1.01 1.45 -1.30 1.29 1.73 6.06 121.

57 theta[57] -0.006 -0.001 0.771 1.07 -1.05 1.04 1.73 6.07 108.

58 theta[58] -0.006 0.027 1.01 1.45 -1.31 1.28 1.73 6.06 100.

59 theta[59] -0.003 -0.004 0.263 0.265 -0.434 0.433 1.05 56.3 974.

60 theta[60] -0.005 -0.008 0.686 0.949 -0.958 0.955 1.73 6.08 109.

61 theta[61] -0.002 -0.006 1.41 2.05 -1.71 1.71 1.73 6.07 99.2

62 theta[62] -0.004 -0.012 0.407 0.482 -0.633 0.642 1.60 6.67 111.

63 theta[63] -0.006 0.012 1.01 1.45 -1.32 1.29 1.73 6.07 112.

64 theta[64] -0.004 0.039 1.40 2.04 -1.71 1.70 1.73 6.07 118.

65 theta[65] 0 -0.025 1.24 1.80 -1.53 1.54 1.73 6.07 122.

66 theta[66] -0.006 -0.011 0.788 1.10 -1.08 1.06 1.73 6.06 109.

67 theta[67] -0.005 -0.011 0.604 0.805 -0.881 0.873 1.73 6.09 108.

68 theta[68] -0.005 0.01 0.896 1.26 -1.19 1.18 1.73 6.07 114.

69 theta[69] -0.004 -0.009 0.588 0.792 -0.867 0.846 1.73 6.10 101.

70 theta[70] -0.004 -0.041 0.923 1.31 -1.21 1.21 1.73 6.08 114.

71 theta[71] -0.009 -0.008 0.252 0.250 -0.431 0.404 1.04 69.1 2621.

72 theta[72] -0.004 -0.055 1.08 1.55 -1.39 1.40 1.73 6.07 114.

73 theta[73] -0.004 0 0.247 0.250 -0.411 0.401 1.00 3708. 4860.

74 theta[74] -0.005 0.044 1.01 1.45 -1.31 1.29 1.73 6.07 105.

75 theta[75] -0.006 -0.099 1.01 1.44 -1.31 1.30 1.73 6.06 109.

76 theta[76] 0.003 -0.065 3.27 4.82 -3.64 3.64 1.73 6.08 112.

77 theta[77] -0.002 -0.032 0.826 1.16 -1.11 1.10 1.73 6.07 108.

78 theta[78] -0.001 -0.065 1.18 1.70 -1.48 1.48 1.73 6.07 97.5

79 theta[79] -0.004 0.059 1.89 2.77 -2.23 2.21 1.73 6.08 105.

80 theta[80] -0.003 -0.016 0.666 0.909 -0.944 0.943 1.73 6.08 114.

81 theta[81] -0.006 -0.005 0.253 0.254 -0.426 0.404 1.02 146. 3265.

82 theta[82] -0.004 -0.001 0.249 0.250 -0.411 0.402 1.01 2604. 4613.

83 theta[83] -0.004 0.085 1.01 1.45 -1.31 1.29 1.73 6.06 103.

84 theta[84] -0.001 -0.062 1.60 2.33 -1.92 1.92 1.73 6.07 98.9

85 theta[85] -0.006 -0.007 0.327 0.344 -0.538 0.517 1.33 9.36 125.

86 theta[86] -0.008 -0.021 1.01 1.44 -1.30 1.29 1.74 6.06 107.

87 theta[87] -0.005 -0.006 0.308 0.319 -0.508 0.503 1.23 12.4 164.

88 theta[88] -0.004 0 0.405 0.475 -0.637 0.645 1.57 6.80 103.

89 theta[89] -0.009 -0.054 1.01 1.45 -1.32 1.29 1.73 6.07 115.

90 theta[90] -0.005 0.045 0.880 1.24 -1.18 1.16 1.73 6.07 116.

91 theta[91] -0.004 0.034 0.872 1.24 -1.16 1.14 1.73 6.06 106.

92 theta[92] -0.002 -0.04 1.09 1.56 -1.39 1.39 1.73 6.05 106.

93 theta[93] -0.007 -0.012 0.247 0.249 -0.412 0.399 1.00 3345. 4727.

94 theta[94] -0.003 0.073 2.61 3.84 -2.96 2.96 1.73 6.09 115.

95 theta[95] -0.003 -0.231 1.68 2.46 -2.00 2.00 1.73 6.09 112.

96 theta[96] -0.003 -0.005 0.408 0.484 -0.639 0.636 1.60 6.67 121.

97 theta[97] -0.001 -0.007 0.364 0.407 -0.581 0.592 1.48 7.44 100.

98 theta[98] -0.007 0.089 1.01 1.44 -1.30 1.30 1.73 6.06 124.

99 theta[99] -0.001 0.08 1.24 1.79 -1.54 1.55 1.73 6.07 110.

100 theta[100] -0.003 0.129 0.958 1.37 -1.25 1.24 1.73 6.07 109.

101 theta[101] -0.006 -0.011 0.751 1.05 -1.03 1.02 1.73 6.06 104.

102 theta[102] 0.001 0.033 2.83 4.17 -3.18 3.19 1.73 6.08 96.6

103 theta[103] -0.005 0.01 0.709 0.981 -0.988 0.968 1.73 6.09 101.

104 theta[104] -0.004 -0.037 0.900 1.27 -1.20 1.20 1.73 6.07 110.

105 theta[105] -0.006 -0.007 0.266 0.261 -0.436 0.434 1.06 40.5 2806.

106 theta[106] -0.003 0.035 1.16 1.67 -1.46 1.46 1.73 6.07 108.

107 theta[107] -0.005 0.021 1.60 2.34 -1.91 1.91 1.73 6.07 106.

108 theta[108] -0.001 -0.016 0.879 1.24 -1.17 1.17 1.73 6.09 106.

109 theta[109] -0.003 -0.197 1.07 1.54 -1.36 1.36 1.73 6.06 112.

110 theta[110] -0.006 -0.004 0.255 0.255 -0.426 0.408 1.02 179. 3672.

111 theta[111] -0.004 -0.008 0.276 0.276 -0.460 0.449 1.12 20.8 196.

112 theta[112] -0.006 -0.028 0.685 0.945 -0.962 0.951 1.73 6.07 96.1

113 theta[113] -0.002 -0.011 0.449 0.557 -0.682 0.693 1.66 6.39 104.

114 theta[114] -0.004 -0.001 0.403 0.476 -0.645 0.630 1.57 6.80 114.

115 theta[115] -0.004 0.013 0.903 1.27 -1.21 1.20 1.73 6.09 110.

116 theta[116] -0.002 0.001 0.297 0.306 -0.494 0.480 1.24 12.0 115.

117 theta[117] -0.002 -0.006 0.254 0.255 -0.418 0.416 1.04 71.7 3080.

118 theta[118] -0.006 -0.007 0.409 0.482 -0.651 0.637 1.60 6.67 106.

119 theta[119] -0.007 -0.038 0.873 1.23 -1.17 1.16 1.74 6.07 117.

120 theta[120] -0.006 -0.059 1.01 1.45 -1.31 1.28 1.73 6.07 113.

121 theta[121] -0.005 -0.002 0.323 0.333 -0.536 0.521 1.31 9.80 120.

122 theta[122] -0.003 -0.005 0.683 0.941 -0.952 0.952 1.73 6.06 108.

123 theta[123] -0.002 0.031 1.24 1.80 -1.54 1.53 1.73 6.08 120.

124 theta[124] -0.002 0.003 1.07 1.53 -1.36 1.35 1.73 6.06 108.

125 theta[125] -0.006 -0.009 1.01 1.44 -1.30 1.29 1.73 6.06 103.

126 theta[126] -0.009 0.004 0.687 0.945 -0.966 0.949 1.73 6.08 101.

127 theta[127] -0.003 -0.031 1.16 1.67 -1.46 1.46 1.73 6.08 114.

128 theta[128] -0.008 0.037 0.883 1.25 -1.17 1.16 1.74 6.06 103.

129 theta[129] -0.009 0.003 0.706 0.981 -0.986 0.964 1.73 6.06 107.

130 theta[130] 0 0.033 1.88 2.75 -2.22 2.21 1.73 6.07 107.

131 theta[131] -0.004 0.002 0.419 0.507 -0.660 0.651 1.62 6.58 113.

132 theta[132] 0 -0.095 1.68 2.46 -2.01 1.99 1.73 6.08 110.

133 theta[133] -0.008 -0.135 1.01 1.45 -1.32 1.30 1.73 6.06 103.

134 theta[134] 0.001 -0.084 2.61 3.84 -2.95 2.96 1.73 6.08 118.

135 theta[135] -0.005 0.009 0.826 1.17 -1.11 1.11 1.73 6.07 113.

136 theta[136] -0.007 0.037 1.01 1.45 -1.30 1.30 1.73 6.06 109.

137 theta[137] -0.002 0.012 0.816 1.15 -1.10 1.10 1.73 6.06 108.

138 theta[138] -0.003 0.038 0.826 1.17 -1.11 1.11 1.74 6.05 108.

139 theta[139] -0.004 -0.004 0.256 0.253 -0.425 0.411 1.02 166. 4446.

140 theta[140] -0.004 -0.002 0.249 0.246 -0.416 0.402 1.03 111. 3771.

141 theta[141] -0.004 0.004 0.872 1.24 -1.17 1.15 1.73 6.07 104.

142 theta[142] -0.007 -0.107 1.01 1.45 -1.31 1.29 1.73 6.06 103.

143 theta[143] -0.005 0.023 1.01 1.44 -1.29 1.31 1.73 6.07 113.

144 theta[144] -0.001 0.035 0.855 1.21 -1.14 1.13 1.73 6.06 104.

145 theta[145] -0.008 0.009 1.01 1.44 -1.31 1.29 1.73 6.07 109.

146 theta[146] -0.004 0.007 0.663 0.910 -0.932 0.930 1.73 6.07 120.

147 theta[147] 0.001 -0.001 0.894 1.27 -1.19 1.18 1.73 6.07 103.

148 theta[148] -0.002 -0.005 0.279 0.280 -0.455 0.460 1.13 19.9 279.

149 theta[149] -0.006 -0.05 1.01 1.44 -1.30 1.31 1.73 6.07 108.

150 theta[150] -0.009 -0.135 1.01 1.46 -1.30 1.28 1.73 6.07 102.

151 theta[151] -0.006 -0.029 0.689 0.947 -0.969 0.957 1.73 6.07 105.

152 theta[152] -0.005 -0.024 1.01 1.44 -1.31 1.30 1.73 6.07 100.

153 theta[153] -0.001 0.011 1.55 2.26 -1.87 1.87 1.73 6.07 116.

154 theta[154] -0.007 -0.019 0.960 1.37 -1.25 1.24 1.73 6.07 114.

155 theta[155] -0.008 0.114 1.01 1.45 -1.29 1.29 1.73 6.07 100.

156 theta[156] -0.006 -0.071 0.870 1.24 -1.16 1.15 1.73 6.07 102.

157 theta[157] -0.008 -0.011 0.869 1.24 -1.16 1.14 1.73 6.07 114.

158 theta[158] -0.005 -0.004 0.248 0.248 -0.412 0.403 1.00 4189. 4432.

159 theta[159] -0.004 0 0.664 0.916 -0.940 0.934 1.73 6.07 101.

160 theta[160] -0.008 -0.003 1.01 1.45 -1.30 1.29 1.73 6.08 104.

161 theta[161] -0.002 -0.087 1.33 1.92 -1.63 1.62 1.73 6.07 118.

162 theta[162] -0.003 -0.005 0.307 0.314 -0.500 0.509 1.24 11.8 133.

163 theta[163] -0.007 -0.068 1.01 1.45 -1.30 1.29 1.73 6.08 105.

164 theta[164] -0.005 -0.01 0.595 0.798 -0.864 0.869 1.73 6.08 102.

165 theta[165] -0.004 0 0.308 0.316 -0.517 0.491 1.21 12.9 142.

166 theta[166] -0.007 -0.005 1.01 1.45 -1.32 1.29 1.73 6.07 120.

167 theta[167] -0.004 -0.005 0.410 0.484 -0.651 0.639 1.58 6.77 105.

168 theta[168] -0.001 -0.069 1.40 2.04 -1.71 1.73 1.73 6.07 112.

169 theta[169] -0.005 -0.008 0.428 0.515 -0.674 0.655 1.64 6.48 100.

170 theta[170] -0.006 -0.005 0.273 0.271 -0.459 0.445 1.13 20.3 244.

171 theta[171] -0.007 -0.005 0.789 1.11 -1.09 1.06 1.73 6.09 102.

172 theta[172] -0.003 -0.007 0.318 0.333 -0.516 0.525 1.32 9.58 128.

173 theta[173] -0.003 0.016 0.663 0.911 -0.941 0.930 1.73 6.07 112.

174 theta[174] -0.004 0.027 0.747 1.04 -1.04 1.02 1.73 6.05 98.2

175 theta[175] -0.004 -0.009 0.707 0.982 -0.980 0.967 1.73 6.09 99.9

176 theta[176] -0.007 -0.012 0.827 1.17 -1.11 1.11 1.73 6.07 104.

177 theta[177] -0.005 -0.006 0.501 0.642 -0.760 0.753 1.70 6.17 106. Stan allows starting values to be set via cmdstanr

Alternatively:

In Stan file, restrict λ to be positive: vector<lower=0>[nItems] lambda;

Can also choose prior that has strictly positive range (like log-normal distribution)

Note: the restriction on the space of λ will not permit truely negative values

parameters {

vector[nObs] theta; // the latent variables (one for each person)

vector[nItems] mu; // the item intercepts (one for each item)

vector<lower=0>[nItems] lambda; // the factor loadings/item discriminations (one for each item)

vector<lower=0>[nItems] psi; // the unique standard deviations (one for each item)

}StanStarting values (initial values) are the first values used when an MCMC chain starts

In Stan, by default, parameters are randomly started between -2 and 2

What we need:

Randomly start all λ parameters so that they converge to the λiθp mode

As opposed to the (-λi)(-θp) mode

cmdstanr Syntax for Initial ValuesAdd the init option to the $sample() function of the cmdstanr object:

The init option can be specified as a function, here, randomly starting each λ following a normal distribution

See the lecture R syntax for information on how to confirm starting values are set.

You should find the initial values using $init() function

[[1]]

[[1]]$lambda

[1] 7.505537 8.652382 13.870335 11.813123 11.473054 10.293251 10.566242

[8] 11.178553 10.431699 10.310106

[[2]]

[[2]]$lambda

[1] 11.907653 9.668295 10.510007 8.205055 13.167940 12.785517 6.375484

[8] 9.567361 11.099712 10.965472

[[3]]

[[3]]$lambda

[1] 11.521697 9.108684 7.589333 10.602933 6.921710 11.270741 11.405904

[8] 6.188234 11.877843 9.551016

[[4]]

[[4]]$lambda

[1] 8.652366 10.891575 12.561234 13.130260 7.598908 9.124610 10.292763

[8] 10.132038 9.109928 5.315775[1] 1.006357# A tibble: 30 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 mu[1] 2.37 2.37 0.0866 0.0851 2.22 2.51 1.00 1317. 3146.

2 mu[2] 1.95 1.95 0.0857 0.0846 1.81 2.09 1.01 908. 2329.

3 mu[3] 1.87 1.87 0.0839 0.0848 1.74 2.01 1.00 1111. 3011.

4 mu[4] 2.01 2.01 0.0847 0.0844 1.87 2.15 1.00 1040. 2582.

5 mu[5] 1.98 1.98 0.0859 0.0868 1.84 2.12 1.01 790. 2080.

6 mu[6] 1.89 1.89 0.0766 0.0768 1.77 2.02 1.01 750. 2168.

7 mu[7] 1.72 1.72 0.0767 0.0740 1.59 1.85 1.01 1000. 2708.

8 mu[8] 1.84 1.84 0.0723 0.0719 1.72 1.96 1.01 775. 2012.

9 mu[9] 1.80 1.81 0.0863 0.0854 1.66 1.95 1.00 1010. 2552.

10 mu[10] 1.52 1.52 0.0807 0.0805 1.39 1.65 1.00 1428. 3074.

11 lambda[1] 0.738 0.737 0.0823 0.0817 0.606 0.877 1.00 3138. 5274.

12 lambda[2] 0.87 0.867 0.0743 0.0743 0.752 0.993 1.00 1903. 3675.

13 lambda[3] 0.802 0.8 0.0777 0.0765 0.678 0.934 1.00 2452. 4289.

14 lambda[4] 0.843 0.839 0.0765 0.0750 0.723 0.971 1.00 2267. 4160.

15 lambda[5] 0.997 0.995 0.0700 0.0687 0.886 1.12 1.00 1393. 3095.

16 lambda[6] 0.899 0.896 0.0637 0.0646 0.800 1.01 1.00 1439. 3092.

17 lambda[7] 0.765 0.764 0.0689 0.0701 0.654 0.879 1.00 2213. 4109.

18 lambda[8] 0.853 0.851 0.0611 0.0612 0.755 0.957 1.00 1443. 2821.

19 lambda[9] 0.861 0.858 0.0784 0.0785 0.736 0.996 1.00 2267. 4043.

20 lambda[10] 0.673 0.672 0.0773 0.0764 0.549 0.800 1.00 3313. 4230.

21 psi[1] 0.891 0.889 0.0494 0.0491 0.814 0.976 1.00 15504. 6185.

22 psi[2] 0.735 0.733 0.0433 0.0436 0.667 0.809 1.00 11583. 5925.

23 psi[3] 0.782 0.78 0.0443 0.0442 0.712 0.858 1.00 13289. 6547.

24 psi[4] 0.757 0.755 0.0445 0.0443 0.687 0.833 1.00 12802. 6653.

25 psi[5] 0.545 0.544 0.0366 0.0369 0.486 0.607 1.00 7541. 6505.

26 psi[6] 0.505 0.503 0.0336 0.0334 0.452 0.563 1.00 9362. 6500.

27 psi[7] 0.686 0.685 0.0404 0.0401 0.623 0.755 1.00 14624. 6491.

28 psi[8] 0.48 0.479 0.0318 0.0315 0.430 0.534 1.00 9793. 7087.

29 psi[9] 0.781 0.779 0.0458 0.0455 0.708 0.858 1.00 11809. 7030.

30 psi[10] 0.839 0.837 0.0467 0.0450 0.766 0.920 1.00 15303. 5988.blavaanblavaan.model <- ' theta =~ item1 + item2 + item3 + item4 + item5 + item6 + item7 + item8 + item9 + item10 '

model01_blv <- bcfa(blavaan.model, data=conspiracyItems,

n.chains = 4, burnin = 1000, sample = 2000,

target = 'stan', seed = 09102022,

save.lvs = TRUE, # save sampled latent variable

std.lv = TRUE,

bcontrol = list(cores = 4),

mcmcfile=TRUE # save Stan file

) # standardized latent variableblavaan Resultsblavaan 0.5.3 ended normally after 2000 iterations

Estimator BAYES

Optimization method MCMC

Number of model parameters 20

Number of observations 177

Statistic MargLogLik PPP

Value -2153.697 0.000

Parameter Estimates:

Latent Variables:

Estimate Post.SD pi.lower pi.upper Rhat Prior

theta =~

item1 0.738 0.082 0.581 0.902 1.000 normal(0,10)

item2 0.869 0.077 0.726 1.027 1.001 normal(0,10)

item3 0.802 0.076 0.659 0.955 1.001 normal(0,10)

item4 0.842 0.078 0.696 1.002 1.001 normal(0,10)

item5 0.997 0.071 0.867 1.144 1.001 normal(0,10)

item6 0.899 0.064 0.780 1.033 1.001 normal(0,10)

item7 0.764 0.069 0.634 0.911 1.001 normal(0,10)

item8 0.852 0.061 0.739 0.976 1.001 normal(0,10)

item9 0.859 0.078 0.709 1.018 1.001 normal(0,10)

item10 0.673 0.076 0.528 0.826 1.000 normal(0,10)

Variances:

Estimate Post.SD pi.lower pi.upper Rhat Prior

.item1 0.791 0.088 0.636 0.976 1.000 gamma(1,.5)[sd]

.item2 0.538 0.064 0.426 0.677 1.000 gamma(1,.5)[sd]

.item3 0.608 0.071 0.481 0.760 1.000 gamma(1,.5)[sd]

.item4 0.571 0.068 0.454 0.716 1.000 gamma(1,.5)[sd]

.item5 0.296 0.040 0.224 0.385 1.000 gamma(1,.5)[sd]

.item6 0.254 0.034 0.193 0.327 1.000 gamma(1,.5)[sd]

.item7 0.469 0.055 0.374 0.587 1.000 gamma(1,.5)[sd]

.item8 0.230 0.030 0.177 0.293 1.000 gamma(1,.5)[sd]

.item9 0.609 0.073 0.484 0.767 1.000 gamma(1,.5)[sd]

.item10 0.701 0.079 0.562 0.871 1.000 gamma(1,.5)[sd]

theta 1.000 You can find more details in the Edgar Merkle’s paper.

Very unreadable Stan file (1891 lines)

limits in setting up prior distributions (cannot change distribution family for lambda and psi)

Use rstan rather than cmdstanr

/* This file is based on LERSIL.stan by Ben Goodrich.

https://github.com/bgoodri/LERSIL */

functions { // you can use these in R following `rstan::expose_stan_functions("foo.stan")`

// mimics lav_mvnorm_cluster_implied22l():

matrix calc_W_tilde(matrix sigma_w, vector mu_w, array[] int var1_idx, int p_tilde) {

matrix[p_tilde, p_tilde + 1] out = rep_matrix(0, p_tilde, p_tilde + 1); // first column is mean vector

vector[p_tilde] mu1 = rep_vector(0, p_tilde);

matrix[p_tilde, p_tilde] sig1 = rep_matrix(0, p_tilde, p_tilde);

mu1[var1_idx] = mu_w;

sig1[var1_idx, var1_idx] = sigma_w;

out = append_col(mu1, sig1);

return out;

}

matrix calc_B_tilde(matrix sigma_b, vector mu_b, array[] int var2_idx, int p_tilde) {

matrix[p_tilde, p_tilde + 1] out = rep_matrix(0, p_tilde, p_tilde + 1);

vector[p_tilde] mu2 = rep_vector(0, p_tilde);

matrix[p_tilde, p_tilde] sig2 = rep_matrix(0, p_tilde, p_tilde);

mu2[var2_idx] = mu_b;

sig2[var2_idx, var2_idx] = sigma_b;

out = append_col(mu2, sig2);

return out;

}

vector twolevel_logdens(array[] vector mean_d, array[] matrix cov_d, matrix S_PW, array[] vector YX, array[] int nclus, array[] int clus_size, array[] int clus_sizes, int nclus_sizes, array[] int clus_size_ns, vector impl_Muw, matrix impl_Sigmaw, vector impl_Mub, matrix impl_Sigmab, array[] int ov_idx1, array[] int ov_idx2, array[] int within_idx, array[] int between_idx, array[] int both_idx, int p_tilde, int N_within, int N_between, int N_both){

matrix[p_tilde, p_tilde + 1] W_tilde;

matrix[p_tilde, p_tilde] W_tilde_cov;

matrix[p_tilde, p_tilde + 1] B_tilde;

matrix[p_tilde, p_tilde] B_tilde_cov;

vector[p_tilde] Mu_WB_tilde;

vector[N_between] Mu_z;

int N_wo_b = p_tilde - N_between;

vector[N_wo_b] Mu_y;

vector[N_wo_b] Mu_w;

vector[N_wo_b] Mu_b;

vector[N_between + N_wo_b] Mu_full;

matrix[N_between, N_between] Sigma_zz;

matrix[N_between, N_between] Sigma_zz_inv;

real Sigma_zz_ld;

matrix[N_wo_b, N_between] Sigma_yz;

matrix[N_wo_b, N_between] Sigma_yz_zi;

matrix[N_wo_b, N_wo_b] Sigma_b;

matrix[N_wo_b, N_wo_b] Sigma_b_z;

matrix[N_wo_b, N_wo_b] Sigma_w;

matrix[N_wo_b, N_wo_b] Sigma_w_inv;

real Sigma_w_ld;

matrix[N_wo_b, N_wo_b] Sigma_j;

matrix[N_wo_b, N_wo_b] Sigma_j_inv;

matrix[N_wo_b, N_between] Sigma_ji_yz_zi;

matrix[N_between, N_between] Vinv_11;

real Sigma_j_ld;

vector[nclus_sizes] L;

vector[nclus_sizes] B;

array[N_between] int bidx;

array[p_tilde - N_between] int notbidx;

real q_zz;

real q_yz;

real q_yyc;

vector[nclus_sizes] P;

vector[nclus_sizes] q_W;

vector[nclus_sizes] L_W;

vector[nclus_sizes] loglik;

vector[nclus_sizes] nperclus = to_vector(clus_sizes) .* to_vector(clus_size_ns);

int cluswise = 0;

int r1 = 1; // running index of rows of YX corresponding to each cluster

// 1. compute necessary vectors/matrices, like lav_mvnorm_cluster_implied22l() of lav_mvnorm_cluster.R

if (nclus[2] == nclus_sizes) cluswise = 1;

W_tilde = calc_W_tilde(impl_Sigmaw, impl_Muw, ov_idx1, p_tilde);

W_tilde_cov = block(W_tilde, 1, 2, p_tilde, p_tilde);

B_tilde = calc_B_tilde(impl_Sigmab, impl_Mub, ov_idx2, p_tilde);

B_tilde_cov = block(B_tilde, 1, 2, p_tilde, p_tilde);

Mu_WB_tilde = rep_vector(0, p_tilde);

if (N_within > 0) {

for (i in 1:N_within) {

Mu_WB_tilde[within_idx[i]] = W_tilde[within_idx[i], 1];

B_tilde[within_idx[i], 1] = 0;

}

}

if (N_both > 0) {

for (i in 1:N_both) {

Mu_WB_tilde[both_idx[i]] = B_tilde[both_idx[i], 1] + W_tilde[both_idx[i], 1];

}

}

// around line 71 of lav_mvnorm_cluster.R

if (N_between > 0) {

bidx = between_idx[1:N_between];

notbidx = between_idx[(N_between + 1):p_tilde];

Mu_z = to_vector(B_tilde[bidx, 1]);

Mu_y = Mu_WB_tilde[notbidx];

Mu_w = to_vector(W_tilde[notbidx, 1]);

Mu_b = to_vector(B_tilde[notbidx, 1]);

Sigma_zz = B_tilde_cov[bidx, bidx];

Sigma_yz = B_tilde_cov[notbidx, bidx];

Sigma_b = B_tilde_cov[notbidx, notbidx];

Sigma_w = W_tilde_cov[notbidx, notbidx];

} else {

Mu_y = Mu_WB_tilde;

Mu_w = W_tilde[,1];

Mu_b = B_tilde[,1];

Sigma_b = B_tilde_cov;

Sigma_w = W_tilde_cov;

}

// 2. compute lpdf, around line 203 of lav_mvnorm_cluster

Sigma_w_inv = inverse_spd(Sigma_w);

Sigma_w_ld = log_determinant(Sigma_w);

if (N_between > 0) {

Sigma_zz_inv = inverse_spd(Sigma_zz);

Sigma_zz_ld = log_determinant(Sigma_zz);

Sigma_yz_zi = Sigma_yz * Sigma_zz_inv;

Sigma_b_z = Sigma_b - Sigma_yz * Sigma_yz_zi';

} else {

Sigma_zz_ld = 0;

Sigma_b_z = Sigma_b;

}

Mu_full = append_row(Mu_z, Mu_y);

for (clz in 1:nclus_sizes) {

int nj = clus_sizes[clz];

matrix[N_between + N_wo_b, N_between + N_wo_b] Y2Yc = crossprod(to_matrix(mean_d[clz] - Mu_full)');

matrix[N_between, N_between] Y2Yc_zz;

matrix[N_wo_b, N_between] Y2Yc_yz;

matrix[N_wo_b, N_wo_b] Y2Yc_yy;

array[N_between] int uord_bidx;

array[N_wo_b] int uord_notbidx;

if (!cluswise) Y2Yc += cov_d[clz]; // variability between clusters of same size, will always equal 0 for clusterwise

if (N_between > 0) {

for (k in 1:N_between) {

uord_bidx[k] = k;

}

if (N_wo_b > 0) {

for (k in 1:N_wo_b) {

uord_notbidx[k] = N_between + k;

}

}

Y2Yc_zz = Y2Yc[uord_bidx, uord_bidx];

Y2Yc_yz = Y2Yc[uord_notbidx, uord_bidx];

Y2Yc_yy = Y2Yc[uord_notbidx, uord_notbidx];

} else {

Y2Yc_yy = Y2Yc;

}

Sigma_j = (nj * Sigma_b_z) + Sigma_w;

Sigma_j_inv = inverse_spd(Sigma_j); // FIXME npd exceptions for within-only

Sigma_j_ld = log_determinant(Sigma_j);

L[clz] = Sigma_zz_ld + Sigma_j_ld;

if (N_between > 0) {

Sigma_ji_yz_zi = Sigma_j_inv * Sigma_yz_zi;

Vinv_11 = Sigma_zz_inv + nj * (Sigma_yz_zi' * Sigma_ji_yz_zi);

q_zz = sum(Vinv_11 .* Y2Yc_zz);

q_yz = -nj * sum(Sigma_ji_yz_zi .* Y2Yc_yz);

} else {

q_zz = 0;

q_yz = 0;

}

q_yyc = -nj * sum(Sigma_j_inv .* Y2Yc_yy);

B[clz] = q_zz + 2 * q_yz - q_yyc;

if (cluswise) {

matrix[N_wo_b, nj] Y_j;

if (N_between > 0) {

for (i in 1:nj) {

Y_j[, i] = YX[r1 - 1 + i, notbidx] - mean_d[clz, uord_notbidx]; // would be nice to have to_matrix() here

}

} else {

for (i in 1:nj) {

Y_j[, i] = YX[r1 - 1 + i] - mean_d[clz]; // would be nice to have to_matrix() here

}

}

r1 += nj; // for next iteration through loop

q_W[clz] = sum(Sigma_w_inv .* tcrossprod(Y_j));

}

}

if (!cluswise) {

q_W = (nperclus - to_vector(clus_size_ns)) * sum(Sigma_w_inv .* S_PW);

}

L_W = (nperclus - to_vector(clus_size_ns)) * Sigma_w_ld;

loglik = -.5 * ((L .* to_vector(clus_size_ns)) + (B .* to_vector(clus_size_ns)) + q_W + L_W);

// add constant, line 300 lav_mvnorm_cluster

P = nperclus * (N_within + N_both) + to_vector(clus_size_ns) * N_between;

loglik += -.5 * (P * log(2 * pi()));

return loglik;

}

/*

Fills in the elements of a coefficient matrix containing some mix of

totally free, free subject to a sign constraint, and fixed elements

@param free_elements vector of unconstrained elements

@param skeleton matrix of the same dimensions as the output whose elements are

positive_infinity(): if output element is totally free

other: if output element is fixed to that number

@return matrix of coefficients

*/

matrix fill_matrix(vector free_elements, matrix skeleton, array[,] int eq_skeleton, int pos_start, int spos_start) {

int R = rows(skeleton);

int C = cols(skeleton);

matrix[R, C] out;

int pos = spos_start; // position of eq_skeleton

int freepos = pos_start; // position of free_elements

int eqelem = 0;

for (c in 1:C) for (r in 1:R) {

real rc = skeleton[r, c];

if (is_inf(rc)) { // free

int eq = eq_skeleton[pos, 1];

int wig = eq_skeleton[pos, 3];

if (eq == 0 || wig == 1) {

out[r,c] = free_elements[freepos];

freepos += 1;

} else {

eqelem = eq_skeleton[pos, 2];

out[r,c] = free_elements[eqelem];

}

pos += 1;

} else out[r,c] = skeleton[r, c]; // fixed, so do not bump pos

}

return out;

}

vector fill_prior(vector free_elements, array[] real pri_mean, array[,] int eq_skeleton) {

int R = dims(eq_skeleton)[1];

int eqelem = 0;

int pos = 1;

vector[num_elements(pri_mean)] out;

for (r in 1:R) {

if (pos <= num_elements(pri_mean)) {

int eq = eq_skeleton[r, 1];

int wig = eq_skeleton[r, 3];

if (eq == 0) {

out[pos] = pri_mean[pos];

pos += 1;

} else if (wig == 1) {

eqelem = eq_skeleton[r, 2];

out[pos] = free_elements[eqelem];

pos += 1;

}

}

}

return out;

}

/*

* This is a bug-free version of csr_to_dense_matrix and has the same arguments

*/

matrix to_dense_matrix(int m, int n, vector w, array[] int v, array[] int u) {

matrix[m, n] out = rep_matrix(0, m, n);

int pos = 1;

for (i in 1:m) {

int start = u[i];

int nnz = u[i + 1] - start;

for (j in 1:nnz) {

out[i, v[pos]] = w[pos];

pos += 1;

}

}

return out;

}

// sign function

int sign(real x) {

if (x > 0)

return 1;

else

return -1;

}

// sign-constrain a vector of loadings

vector sign_constrain_load(vector free_elements, int npar, array[,] int sign_mat) {

vector[npar] out;

for (i in 1:npar) {

if (sign_mat[i,1]) {

int lookupval = sign_mat[i,2];

if (free_elements[lookupval] < 0) {

out[i] = -free_elements[i];

} else {

out[i] = free_elements[i];

}

} else {

out[i] = free_elements[i];

}

}

return out;

}

// sign-constrain a vector of regressions or covariances

vector sign_constrain_reg(vector free_elements, int npar, array[,] int sign_mat, vector load_par1, vector load_par2) {

vector[npar] out;

for (i in 1:npar) {

if (sign_mat[i,1]) {

int lookupval1 = sign_mat[i,2];

int lookupval2 = sign_mat[i,3];

if (sign(load_par1[lookupval1]) * sign(load_par2[lookupval2]) < 0) {

out[i] = -free_elements[i];

} else {

out[i] = free_elements[i];

}

} else {

out[i] = free_elements[i];

}

}

return out;

}

// obtain covariance parameter vector for correlation/sd matrices

vector cor2cov(array[] matrix cormat, array[] matrix sdmat, int num_free_elements, array[] matrix matskel, array[,] int wskel, int ngrp) {

vector[num_free_elements] out;

int R = rows(to_matrix(cormat[1]));

int pos = 1; // position of eq_skeleton

int freepos = 1; // position of free_elements

for (g in 1:ngrp) {

for (c in 1:(R-1)) for (r in (c+1):R) {

if (is_inf(matskel[g,r,c])) {

if (wskel[pos,1] == 0) {

out[freepos] = sdmat[g,r,r] * sdmat[g,c,c] * cormat[g,r,c];

freepos += 1;

}

pos += 1;

}

}

}

return out;

}

// E step of EM algorithm on latent continuous space

array[] matrix estep(array[] vector YXstar, array[] vector Mu, array[] matrix Sigma, array[] int Nobs, array[,] int Obsvar, array[] int startrow, array[] int endrow, array[] int grpnum, int Np, int Ng) {

int p = dims(YXstar)[2];

array[Ng] matrix[p, p + 1] out; //mean vec + cov mat

matrix[dims(YXstar)[1], p] YXfull; // columns consistenly ordered

matrix[p, p] T2pat;

array[p] int obsidx;

int r1;

int r2;

int grpidx;

int Nmis;

for (g in 1:Ng) {

out[g] = rep_matrix(0, p, p + 1);

}

for (mm in 1:Np) {

obsidx = Obsvar[mm,];

r1 = startrow[mm];

r2 = endrow[mm];

grpidx = grpnum[mm];

Nmis = p - Nobs[mm];

if (Nobs[mm] < p) {

matrix[Nobs[mm], Nobs[mm]] Sig22 = Sigma[grpidx, obsidx[1:Nobs[mm]], obsidx[1:Nobs[mm]]];

matrix[Nmis, Nmis] Sig11 = Sigma[grpidx, obsidx[(Nobs[mm] + 1):p], obsidx[(Nobs[mm] + 1):p]];

matrix[Nmis, Nobs[mm]] Sig12 = Sigma[grpidx, obsidx[(Nobs[mm] + 1):p], obsidx[1:Nobs[mm]]];

matrix[Nobs[mm], Nobs[mm]] S22inv = inverse_spd(Sig22);

matrix[Nmis, Nmis] T2p11 = Sig11 - (Sig12 * S22inv * Sig12');

// partition into observed/missing, compute Sigmas, add to out

for (jj in r1:r2) {

vector[Nmis] ymis;

ymis = Mu[grpidx, obsidx[(Nobs[mm] + 1):p]] + (Sig12 * S22inv * (YXstar[jj, 1:Nobs[mm]] - Mu[grpidx, obsidx[1:Nobs[mm]]]));

for (kk in 1:Nobs[mm]) {

YXfull[jj, obsidx[kk]] = YXstar[jj, kk];

}

for (kk in (Nobs[mm] + 1):p) {

YXfull[jj, obsidx[kk]] = ymis[kk - Nobs[mm]];

}

}

T2pat = crossprod(YXfull[r1:r2,]);

// correction for missing cells/conditional covariances

for (jj in 1:Nmis) {

for (kk in jj:Nmis) {

T2pat[obsidx[Nobs[mm] + jj], obsidx[Nobs[mm] + kk]] = T2pat[obsidx[Nobs[mm] + jj], obsidx[Nobs[mm] + kk]] + (r2 - r1 + 1) * T2p11[jj, kk];

if (kk > jj) {

T2pat[obsidx[Nobs[mm] + kk], obsidx[Nobs[mm] + jj]] = T2pat[obsidx[Nobs[mm] + jj], obsidx[Nobs[mm] + kk]];

}

}

}

} else {

// complete data

for (jj in r1:r2) {

for (kk in 1:Nobs[mm]) {

YXfull[jj, obsidx[kk]] = YXstar[jj, kk];

}

}

T2pat = crossprod(YXfull[r1:r2,]);

}

for (i in 1:p) {

out[grpidx,i,1] += sum(YXfull[r1:r2,i]);

}

out[grpidx,,2:(p+1)] += T2pat;

}

return out;

}

matrix sig_inv_update(matrix Sigmainv, array[] int obsidx, int Nobs, int np, real logdet) {

matrix[Nobs + 1, Nobs + 1] out = rep_matrix(0, Nobs + 1, Nobs + 1);

int nrm = np - Nobs;

matrix[nrm, nrm] H;

matrix[nrm, Nobs] A;

if (nrm == 0) {

out[1:Nobs, 1:Nobs] = Sigmainv;

out[Nobs + 1, Nobs + 1] = logdet;

} else {

H = Sigmainv[obsidx[(Nobs + 1):np], obsidx[(Nobs + 1):np]];

A = Sigmainv[obsidx[(Nobs + 1):np], obsidx[1:Nobs]];

out[1:Nobs, 1:Nobs] = Sigmainv[obsidx[1:Nobs], obsidx[1:Nobs]] - A' * mdivide_left_spd(H, A);

out[Nobs + 1, Nobs + 1] = logdet + log_determinant(H);

}

return out;

}

real multi_normal_suff(vector xbar, matrix S, vector Mu, matrix Supdate, int N) {

int Nobs = dims(S)[1];

real out;

// using elementwise multiplication + sum here for efficiency

out = -.5 * N * ( sum(Supdate[1:Nobs, 1:Nobs] .* (S + (xbar - Mu) * (xbar - Mu)')) + Supdate[Nobs + 1, Nobs + 1] + Nobs * log(2 * pi()) );

if(is_nan(out) || out == positive_infinity()) out = negative_infinity();

return out;

}

// compute mean vectors and cov matrices for a single group (two-level models)

array[] vector calc_mean_vecs(array[] vector YXstar, array[] vector mean_d, array[] int nclus, array[] int Xvar, array[] int Xbetvar, int Nx, int Nx_between, int p_tilde) {

vector[Nx] ov_mean = rep_vector(0, Nx);

vector[Nx_between] ov_mean_d = rep_vector(0, Nx_between);

int nr = dims(YXstar)[1];

array[2] vector[p_tilde] out;

for (i in 1:2) out[i] = rep_vector(0, p_tilde);

if (Nx > 0) {

for (i in 1:nr) {

ov_mean += YXstar[i, Xvar[1:Nx]];

}

ov_mean *= pow(nclus[1], -1);

out[1, 1:Nx] = ov_mean;

}

if (Nx_between > 0) {

for (cc in 1:nclus[2]) {

ov_mean_d += mean_d[cc, 1:Nx_between];

}

ov_mean_d *= pow(nclus[2], -1);

out[2, 1:Nx_between] = ov_mean_d;

}

return out;

}

array[] matrix calc_cov_mats(array[] vector YXstar, array[] vector mean_d, array[] vector mean_vecs, array[] int nclus, array[] int Xvar, array[] int Xbetvar, int Nx, int Nx_between, int p_tilde) {

matrix[Nx_between, Nx_between] cov_mean_d = rep_matrix(0, Nx_between, Nx_between);

matrix[Nx, Nx] cov_w = rep_matrix(0, Nx, Nx);

matrix[Nx, Nx] cov_w_inv;

int nr = dims(YXstar)[1];

array[3] matrix[p_tilde, p_tilde] out;

for (i in 1:3) out[i] = rep_matrix(0, p_tilde, p_tilde);

if (Nx > 0) {

for (i in 1:nr) {

cov_w += tcrossprod(to_matrix(YXstar[i, Xvar[1:Nx]] - mean_vecs[1, 1:Nx]));

}

cov_w *= pow(nclus[1], -1);

cov_w_inv[1:Nx, 1:Nx] = inverse_spd(cov_w);

out[2, 1:Nx, 1:Nx] = cov_w;

out[3, 1:Nx, 1:Nx] = cov_w_inv;

out[3, Nx + 1, Nx + 1] = log_determinant(cov_w); // need log_determinant for multi_normal_suff

}

if (Nx_between > 0) {

for (cc in 1:nclus[2]) {

cov_mean_d += tcrossprod(to_matrix(mean_d[cc, 1:Nx_between] - mean_vecs[2, 1:Nx_between]));

}

cov_mean_d *= pow(nclus[2], -1);

out[1, 1:Nx_between, 1:Nx_between] = cov_mean_d;

}

return out;

}

// compute log_lik of fixed.x variables for a single group (two-level models)

vector calc_log_lik_x(array[] vector mean_d, vector ov_mean_d, matrix cov_mean_d, matrix cov_w, matrix cov_w_inv, array[] int nclus, array[] int cluster_size, array[] int Xvar, array[] int Xbetvar, int Nx, int Nx_between) {

vector[nclus[2]] out = rep_vector(0, nclus[2]);

for (cc in 1:nclus[2]) {

if (Nx > 0) {

out[cc] += multi_normal_suff(mean_d[cc, Xvar[1:Nx]], cov_w[1:Nx, 1:Nx], mean_d[cc, Xvar[1:Nx]], cov_w_inv[1:(Nx + 1), 1:(Nx + 1)], cluster_size[cc]);

}

if (Nx_between > 0) {

out[cc] += multi_normal_lpdf(mean_d[cc, 1:Nx_between] | ov_mean_d[1:Nx_between], cov_mean_d[1:Nx_between, 1:Nx_between]);

}

}

return out;

}

// fill covariance matrix with blocks

array[] matrix fill_cov(array[] matrix covmat, array[,] int blkse, array[] int nblk,

array[] matrix mat_1, array[] matrix mat_2, array[] matrix mat_3,

array[] matrix mat_4, array[] matrix mat_5) {

array[dims(covmat)[1]] matrix[dims(covmat)[2], dims(covmat)[3]] out = covmat;

for (k in 1:sum(nblk)) {

int blkidx = blkse[k, 6];

int arrayidx = blkse[k, 5];

int blkgrp = blkse[k, 4];

int srow = blkse[k, 1];

int erow = blkse[k, 2];

if (arrayidx == 1) {

out[blkgrp, srow:erow, srow:erow] = mat_1[blkidx];

} else if (arrayidx == 2) {

out[blkgrp, srow:erow, srow:erow] = mat_2[blkidx];

} else if (arrayidx == 3) {

out[blkgrp, srow:erow, srow:erow] = mat_3[blkidx];

} else if (arrayidx == 4) {

out[blkgrp, srow:erow, srow:erow] = mat_4[blkidx];

} else {

out[blkgrp, srow:erow, srow:erow] = mat_5[blkidx];

}

}

return out;

}

}

data {

// see https://books.google.com/books?id=9AC-s50RjacC&lpg=PP1&dq=LISREL&pg=PA2#v=onepage&q=LISREL&f=false

int<lower=0> p; // number of manifest response variables

int<lower=0> p_c; // number of manifest level 2 variables

int<lower=0> q; // number of manifest predictors

int<lower=0> m; // number of latent endogenous variables

int<lower=0> m_c; // number of latent level 2 variables

int<lower=0> n; // number of latent exogenous variables

int<lower=1> Ng; // number of groups

int<lower=0, upper=1> missing; // are there missing values?

int<lower=0, upper=1> save_lvs; // should we save lvs?

int<lower=1> Np; // number of group-by-missing patterns combos

array[Ng] int<lower=1> N; // number of observations per group

array[Np] int<lower=1> Nobs; // number of observed variables in each missing pattern

array[Np] int<lower=0> Nordobs; // number of ordinal observed variables in each missing pattern

array[Np, p + q] int<lower=0> Obsvar; // indexing of observed variables

int<lower=1> Ntot; // number of observations across all groups

array[Np] int<lower=1> startrow; // starting row for each missing pattern

array[Np] int<lower=1,upper=Ntot> endrow; // ending row for each missing pattern

array[Np] int<lower=1,upper=Ng> grpnum; // group number for each row of data

int<lower=0,upper=1> wigind; // do any parameters have approx equality constraint ('wiggle')?

int<lower=0, upper=1> has_data; // are the raw data on y and x available?

int<lower=0, upper=1> ord; // are there any ordinal variables?

int<lower=0, upper=1> multilev; // is this a multilevel dataset?

int<lower=0> Nord; // how many ordinal variables?

array[Nord] int<lower=0> ordidx; // indexing of ordinal variables

array[Np, Nord] int<lower=0> OrdObsvar; // indexing of observed ordinal variables in YXo

int<lower=0> Noent; // how many observed entries of ordinal variables (for data augmentation)

array[p + q - Nord] int<lower=0> contidx; // indexing of continuous variables

array[Nord] int<lower=1> nlevs; // how many levels does each ordinal variable have

array[Ng, 2] int<lower=1> nclus; // number of level 1 + level 2 observations

int<lower=0> p_tilde; // total number of variables

array[Ntot] vector[multilev ? p_tilde : p + q - Nord] YX; // continuous data

array[Ntot, Nord] int YXo; // ordinal data

array[Np] int<lower=0> Nx; // number of fixed.x variables (within)

array[Np] int<lower=0> Nx_between; // number of fixed.x variables (between)

int<lower=0, upper=1> use_cov;

int<lower=0, upper=1> pri_only;

int<lower=0> emiter; // number of em iterations for saturated model in ppp (missing data only)

int<lower=0, upper=1> use_suff; // should we compute likelihood via mvn sufficient stats?

int<lower=0, upper=1> do_test; // should we do everything in generated quantities?

array[Np] vector[multilev ? p_tilde : p + q - Nord] YXbar; // sample means of continuous manifest variables

array[Np] matrix[multilev ? (p_tilde + 1) : (p + q - Nord + 1), multilev ? (p_tilde + 1) : (p + q - Nord + 1)] S; // sample covariance matrix among all continuous manifest variables NB!! multiply by (N-1) to use wishart lpdf!!

array[sum(nclus[,2])] int<lower=1> cluster_size; // number of obs per cluster

array[Ng] int<lower=1> ncluster_sizes; // number of unique cluster sizes

array[sum(ncluster_sizes)] int<lower=1> cluster_sizes; // unique cluster sizes

array[sum(ncluster_sizes)] int<lower=1> cluster_size_ns; // number of clusters of each size

array[Np, multilev ? p_tilde : p + q] int<lower=0> Xvar; // indexing of fixed.x variables (within)

array[Np, multilev ? p_tilde : p + q] int<lower=0> Xdatvar; // indexing of fixed.x in data (differs from Xvar when missing)

array[Np, multilev ? p_tilde : p + q] int<lower=0> Xbetvar; // indexing of fixed.x variables (between)

array[sum(ncluster_sizes)] vector[p_tilde] mean_d; // sample means by unique cluster size

array[sum(ncluster_sizes)] matrix[p_tilde, p_tilde] cov_d; // sample covariances by unique cluster size

array[Ng] matrix[p_tilde, p_tilde] cov_w; // observed "within" covariance matrix

array[sum(nclus[,2])] vector[p_tilde] mean_d_full; // sample means/covs by cluster, for clusterwise log-densities

array[sum(nclus[,2])] matrix[p_tilde, p_tilde] cov_d_full;

array[Ng] vector[p_tilde] xbar_w; // data estimates of within/between means/covs (for saturated logl)

array[Ng] vector[p_tilde] xbar_b;

array[Ng] matrix[p_tilde, p_tilde] cov_b;

array[Ng] real gs; // group size constant, for computation of saturated logl

int N_within; // number of within variables

int N_between; // number of between variables

int N_both; // number of variables at both levels

array[2] int N_lev; // number of observed variables at each level

array[N_within] int within_idx;

array[p_tilde] int between_idx; // between indexing, followed by within/both

array[N_lev[1]] int ov_idx1;

array[N_lev[2]] int ov_idx2;

array[N_both] int both_idx;

vector[multilev ? sum(ncluster_sizes) : Ng] log_lik_x; // ll of fixed x variables by unique cluster size

vector[multilev ? sum(nclus[,2]) : Ng] log_lik_x_full; // ll of fixed x variables by cluster

/* sparse matrix representations of skeletons of coefficient matrices,

which is not that interesting but necessary because you cannot pass

missing values into the data block of a Stan program from R */

int<lower=0> len_w1; // max number of free elements in Lambda_y per grp

array[Ng] int<lower=0> wg1; // number of free elements in Lambda_y per grp

array[Ng] vector[len_w1] w1; // values of free elements in Lambda_y

array[Ng, len_w1] int<lower=1> v1; // index of free elements in Lambda_y

array[Ng, p + 1] int<lower=1> u1; // index of free elements in Lambda_y

array[sum(wg1), 3] int<lower=0> w1skel;

array[sum(wg1), 2] int<lower=0> lam_y_sign;

int<lower=0> len_lam_y; // number of free elements minus equality constraints

array[len_lam_y] real lambda_y_mn; // prior

array[len_lam_y] real<lower=0> lambda_y_sd;

// same things but for B

int<lower=0> len_w4;

array[Ng] int<lower=0> wg4;

array[Ng] vector[len_w4] w4;

array[Ng, len_w4] int<lower=1> v4;

array[Ng, m + 1] int<lower=1> u4;

array[sum(wg4), 3] int<lower=0> w4skel;

array[sum(wg4), 3] int<lower=0> b_sign;

int<lower=0> len_b;

array[len_b] real b_mn;

array[len_b] real<lower=0> b_sd;

// same things but for diag(Theta)

int<lower=0> len_w5;

array[Ng] int<lower=0> wg5;

array[Ng] vector[len_w5] w5;

array[Ng, len_w5] int<lower=1> v5;

array[Ng, p + 1] int<lower=1> u5;

array[sum(wg5), 3] int<lower=0> w5skel;

int<lower=0> len_thet_sd;

array[len_thet_sd] real<lower=0> theta_sd_shape;

array[len_thet_sd] real<lower=0> theta_sd_rate;

int<lower=-2, upper=2> theta_pow;

// same things but for Theta_r

int<lower=0> len_w7;

array[Ng] int<lower=0> wg7;

array[Ng] vector[len_w7] w7;

array[Ng, len_w7] int<lower=1> v7;

array[Ng, p + 1] int<lower=1> u7;

array[sum(wg7), 3] int<lower=0> w7skel;

int<lower=0> len_thet_r;

array[len_thet_r] real<lower=0> theta_r_alpha;

array[len_thet_r] real<lower=0> theta_r_beta;

// same things but for Psi

int<lower=0> len_w9;

array[Ng] int<lower=0> wg9;

array[Ng] vector[len_w9] w9;

array[Ng, len_w9] int<lower=1> v9;

array[Ng, m + 1] int<lower=1> u9;

array[sum(wg9), 3] int<lower=0> w9skel;

int<lower=0> len_psi_sd;

array[len_psi_sd] real<lower=0> psi_sd_shape;

array[len_psi_sd] real<lower=0> psi_sd_rate;

int<lower=-2,upper=2> psi_pow;

// same things but for Psi_r

int<lower=0> len_w10;

array[Ng] int<lower=0> wg10;

array[Ng] vector[len_w10] w10;

array[Ng, len_w10] int<lower=1> v10;

array[Ng, m + 1] int<lower=1> u10;

array[sum(wg10), 3] int<lower=0> w10skel;

array[sum(wg10), 3] int<lower=0> psi_r_sign;

int<lower=0> len_psi_r;

array[len_psi_r] real<lower=0> psi_r_alpha;

array[len_psi_r] real<lower=0> psi_r_beta;

// for blocks within Psi_r that receive lkj

array[5] int<lower=0> nblk;

array[5] int<lower=3> psidims;

array[sum(nblk), 7] int<lower=0> blkse;

int<lower=0> len_w11;

array[Ng] int<lower=0> wg11;

array[Ng] vector[len_w11] w11;

array[Ng, len_w11] int<lower=1> v11;

array[Ng, m + 1] int<lower=1> u11;

array[sum(wg11), 3] int<lower=0> w11skel;

// same things but for Nu

int<lower=0> len_w13;

array[Ng] int<lower=0> wg13;

array[Ng] vector[len_w13] w13;

array[Ng, len_w13] int<lower=1> v13;

array[Ng, use_cov ? 1 : p + q + 1] int<lower=1> u13;

array[sum(wg13), 3] int<lower=0> w13skel;

int<lower=0> len_nu;

array[len_nu] real nu_mn;

array[len_nu] real<lower=0> nu_sd;

// same things but for Alpha

int<lower=0> len_w14;

array[Ng] int<lower=0> wg14;

array[Ng] vector[len_w14] w14;

array[Ng, len_w14] int<lower=0> v14;

array[Ng, use_cov ? 1 : m + n + 1] int<lower=1> u14;

array[sum(wg14), 3] int<lower=0> w14skel;

int<lower=0> len_alph;

array[len_alph] real alpha_mn;

array[len_alph] real<lower=0> alpha_sd;

// same things but for Tau

int<lower=0> len_w15;

array[Ng] int<lower=0> wg15;

array[Ng] vector[len_w15] w15;

array[Ng, len_w15] int<lower=0> v15;

array[Ng, sum(nlevs) - Nord + 1] int<lower=1> u15;

array[sum(wg15), 3] int<lower=0> w15skel;

int<lower=0> len_tau;

array[len_tau] real tau_mn;

array[len_tau] real<lower=0> tau_sd;

// Level 2 matrices start here!!

// Lambda

int<lower=0> len_w1_c;

array[Ng] int<lower=0> wg1_c;

array[Ng] vector[len_w1_c] w1_c;

array[Ng, len_w1_c] int<lower=1> v1_c;

array[Ng, p_c + 1] int<lower=1> u1_c;

array[sum(wg1_c), 3] int<lower=0> w1skel_c;

array[sum(wg1_c), 2] int<lower=0> lam_y_sign_c;

int<lower=0> len_lam_y_c;

array[len_lam_y_c] real lambda_y_mn_c;

array[len_lam_y_c] real<lower=0> lambda_y_sd_c;

// same things but for B

int<lower=0> len_w4_c;

array[Ng] int<lower=0> wg4_c;

array[Ng] vector[len_w4_c] w4_c;

array[Ng, len_w4_c] int<lower=1> v4_c;

array[Ng, m_c + 1] int<lower=1> u4_c;

array[sum(wg4_c), 3] int<lower=0> w4skel_c;

array[sum(wg4_c), 3] int<lower=0> b_sign_c;

int<lower=0> len_b_c;

array[len_b_c] real b_mn_c;

array[len_b_c] real<lower=0> b_sd_c;

// same things but for diag(Theta)

int<lower=0> len_w5_c;

array[Ng] int<lower=0> wg5_c;

array[Ng] vector[len_w5_c] w5_c;

array[Ng, len_w5_c] int<lower=1> v5_c;

array[Ng, p_c + 1] int<lower=1> u5_c;

array[sum(wg5_c), 3] int<lower=0> w5skel_c;

int<lower=0> len_thet_sd_c;

array[len_thet_sd_c] real<lower=0> theta_sd_shape_c;

array[len_thet_sd_c] real<lower=0> theta_sd_rate_c;

int<lower=-2, upper=2> theta_pow_c;

// same things but for Theta_r

int<lower=0> len_w7_c;

array[Ng] int<lower=0> wg7_c;

array[Ng] vector[len_w7_c] w7_c;

array[Ng, len_w7_c] int<lower=1> v7_c;

array[Ng, p_c + 1] int<lower=1> u7_c;

array[sum(wg7_c), 3] int<lower=0> w7skel_c;

int<lower=0> len_thet_r_c;

array[len_thet_r_c] real<lower=0> theta_r_alpha_c;

array[len_thet_r_c] real<lower=0> theta_r_beta_c;

// same things but for Psi

int<lower=0> len_w9_c;

array[Ng] int<lower=0> wg9_c;

array[Ng] vector[len_w9_c] w9_c;

array[Ng, len_w9_c] int<lower=1> v9_c;

array[Ng, m_c + 1] int<lower=1> u9_c;

array[sum(wg9_c), 3] int<lower=0> w9skel_c;

int<lower=0> len_psi_sd_c;

array[len_psi_sd_c] real<lower=0> psi_sd_shape_c;

array[len_psi_sd_c] real<lower=0> psi_sd_rate_c;

int<lower=-2,upper=2> psi_pow_c;

// same things but for Psi_r

int<lower=0> len_w10_c;

array[Ng] int<lower=0> wg10_c;

array[Ng] vector[len_w10_c] w10_c;

array[Ng, len_w10_c] int<lower=1> v10_c;

array[Ng, m_c + 1] int<lower=1> u10_c;

array[sum(wg10_c), 3] int<lower=0> w10skel_c;

array[sum(wg10_c), 3] int<lower=0> psi_r_sign_c;

int<lower=0> len_psi_r_c;

array[len_psi_r_c] real<lower=0> psi_r_alpha_c;

array[len_psi_r_c] real<lower=0> psi_r_beta_c;

// for blocks within Psi_r that receive lkj

array[5] int<lower=0> nblk_c;

array[5] int<lower=3> psidims_c;

array[sum(nblk_c), 7] int<lower=0> blkse_c;

int<lower=0> len_w11_c;

array[Ng] int<lower=0> wg11_c;

array[Ng] vector[len_w11_c] w11_c;

array[Ng, len_w11_c] int<lower=1> v11_c;

array[Ng, m_c + 1] int<lower=1> u11_c;

array[sum(wg11_c), 3] int<lower=0> w11skel_c;

// same things but for Nu

int<lower=0> len_w13_c;

array[Ng] int<lower=0> wg13_c;

array[Ng] vector[len_w13_c] w13_c;

array[Ng, len_w13_c] int<lower=1> v13_c;

array[Ng, p_c + 1] int<lower=1> u13_c;

array[sum(wg13_c), 3] int<lower=0> w13skel_c;

int<lower=0> len_nu_c;

array[len_nu_c] real nu_mn_c;

array[len_nu_c] real<lower=0> nu_sd_c;

// same things but for Alpha

int<lower=0> len_w14_c;

array[Ng] int<lower=0> wg14_c;

array[Ng] vector[len_w14_c] w14_c;

array[Ng, len_w14_c] int<lower=0> v14_c;

array[Ng, m_c + 1] int<lower=1> u14_c;

array[sum(wg14_c), 3] int<lower=0> w14skel_c;

int<lower=0> len_alph_c;

array[len_alph_c] real alpha_mn_c;

array[len_alph_c] real<lower=0> alpha_sd_c;

}

transformed data { // (re)construct skeleton matrices in Stan (not that interesting)

array[Ng] matrix[p, m] Lambda_y_skeleton;

array[Ng] matrix[m, m] B_skeleton;

array[Ng] matrix[p, p] Theta_skeleton;

array[Ng] matrix[p, p] Theta_r_skeleton;

array[Ng] matrix[m, m] Psi_skeleton;

array[Ng] matrix[m, m] Psi_r_skeleton;

array[Ng] matrix[m, m] Psi_r_skeleton_f;

array[Ng] matrix[p, 1] Nu_skeleton;

array[Ng] matrix[m, 1] Alpha_skeleton;

array[Ng] matrix[sum(nlevs) - Nord, 1] Tau_skeleton;

array[Np] vector[ord ? 0 : (p + q)] YXbarstar;

array[Np] matrix[ord ? 0 : (p + q), ord ? 0 : (p + q)] Sstar;

array[Ng] matrix[p_c, m_c] Lambda_y_skeleton_c;

array[Ng] matrix[m_c, m_c] B_skeleton_c;

array[Ng] matrix[p_c, p_c] Theta_skeleton_c;

array[Ng] matrix[p_c, p_c] Theta_r_skeleton_c;

array[Ng] matrix[m_c, m_c] Psi_skeleton_c;

array[Ng] matrix[m_c, m_c] Psi_r_skeleton_c;

array[Ng] matrix[m_c, m_c] Psi_r_skeleton_f_c;

array[Ng] matrix[p_c, 1] Nu_skeleton_c;

array[Ng] matrix[m_c, 1] Alpha_skeleton_c;

matrix[m, m] I = diag_matrix(rep_vector(1, m));

matrix[m_c, m_c] I_c = diag_matrix(rep_vector(1, m_c));

int Ncont = p + q - Nord;

array[max(nclus[,2]) > 1 ? max(nclus[,2]) : 0] int<lower = 0> intone;

array[Ng,2] int g_start1;

array[Ng,2] int g_start4;

array[Ng,2] int g_start5;

array[Ng,2] int g_start7;

array[Ng,2] int g_start9;

array[Ng,2] int g_start10;

array[Ng,2] int g_start13;

array[Ng,2] int g_start14;

array[Ng,2] int g_start15;

array[Ng,2] int g_start1_c;

array[Ng,2] int g_start4_c;

array[Ng,2] int g_start5_c;

array[Ng,2] int g_start7_c;

array[Ng,2] int g_start9_c;

array[Ng,2] int g_start10_c;

array[Ng,2] int g_start13_c;

array[Ng,2] int g_start14_c;

array[15] int len_free;

array[15] int pos;

array[15] int len_free_c;

array[15] int pos_c;

for (i in 1:15) {

len_free[i] = 0;

pos[i] = 1;

len_free_c[i] = 0;

pos_c[i] = 1;

}

for (g in 1:Ng) {

Lambda_y_skeleton[g] = to_dense_matrix(p, m, w1[g], v1[g,], u1[g,]);

B_skeleton[g] = to_dense_matrix(m, m, w4[g], v4[g,], u4[g,]);

Theta_skeleton[g] = to_dense_matrix(p, p, w5[g], v5[g,], u5[g,]);

Theta_r_skeleton[g] = to_dense_matrix(p, p, w7[g], v7[g,], u7[g,]);

Psi_skeleton[g] = to_dense_matrix(m, m, w9[g], v9[g,], u9[g,]);

Psi_r_skeleton[g] = to_dense_matrix(m, m, w10[g], v10[g,], u10[g,]);

Psi_r_skeleton_f[g] = to_dense_matrix(m, m, w11[g], v11[g,], u11[g,]);

if (!use_cov) {

Nu_skeleton[g] = to_dense_matrix((p + q), 1, w13[g], v13[g,], u13[g,]);

Alpha_skeleton[g] = to_dense_matrix((m + n), 1, w14[g], v14[g,], u14[g,]);

}

Tau_skeleton[g] = to_dense_matrix(sum(nlevs) - Nord, 1, w15[g], v15[g,], u15[g,]);

Lambda_y_skeleton_c[g] = to_dense_matrix(p_c, m_c, w1_c[g], v1_c[g,], u1_c[g,]);

B_skeleton_c[g] = to_dense_matrix(m_c, m_c, w4_c[g], v4_c[g,], u4_c[g,]);

Theta_skeleton_c[g] = to_dense_matrix(p_c, p_c, w5_c[g], v5_c[g,], u5_c[g,]);

Theta_r_skeleton_c[g] = to_dense_matrix(p_c, p_c, w7_c[g], v7_c[g,], u7_c[g,]);

Psi_skeleton_c[g] = to_dense_matrix(m_c, m_c, w9_c[g], v9_c[g,], u9_c[g,]);

Psi_r_skeleton_c[g] = to_dense_matrix(m_c, m_c, w10_c[g], v10_c[g,], u10_c[g,]);

Psi_r_skeleton_f_c[g] = to_dense_matrix(m_c, m_c, w11_c[g], v11_c[g,], u11_c[g,]);

Nu_skeleton_c[g] = to_dense_matrix(p_c, 1, w13_c[g], v13_c[g,], u13_c[g,]);

Alpha_skeleton_c[g] = to_dense_matrix(m_c, 1, w14_c[g], v14_c[g,], u14_c[g,]);

// count free elements in Lambda_y_skeleton

g_start1[g,1] = len_free[1] + 1;

g_start1[g,2] = pos[1];

for (i in 1:p) {

for (j in 1:m) {

if (is_inf(Lambda_y_skeleton[g,i,j])) {

if (w1skel[pos[1],2] == 0 || w1skel[pos[1],3] == 1) len_free[1] += 1;

pos[1] += 1;

}

}

}

// same thing but for B_skeleton

g_start4[g,1] = len_free[4] + 1;

g_start4[g,2] = pos[4];

for (i in 1:m) {

for (j in 1:m) {

if (is_inf(B_skeleton[g,i,j])) {

if (w4skel[pos[4],2] == 0 || w4skel[pos[4],3] == 1) len_free[4] += 1;

pos[4] += 1;

}

}

}

// same thing but for Theta_skeleton

g_start5[g,1] = len_free[5] + 1;

g_start5[g,2] = pos[5];

for (i in 1:p) {

if (is_inf(Theta_skeleton[g,i,i])) {

if (w5skel[pos[5],2] == 0 || w5skel[pos[5],3] == 1) len_free[5] += 1;

pos[5] += 1;

}

}

// same thing but for Theta_r_skeleton

g_start7[g,1] = len_free[7] + 1;

g_start7[g,2] = pos[7];

for (i in 1:(p-1)) {

for (j in (i+1):p) {

if (is_inf(Theta_r_skeleton[g,j,i])) {

if (w7skel[pos[7],2] == 0 || w7skel[pos[7],3] == 1) len_free[7] += 1;

pos[7] += 1;

}

}

}

// same thing but for Psi_skeleton

g_start9[g,1] = len_free[9] + 1;

g_start9[g,2] = pos[9];

for (i in 1:m) {

if (is_inf(Psi_skeleton[g,i,i])) {

if (w9skel[pos[9],2] == 0 || w9skel[pos[9],3] == 1) len_free[9] += 1;

pos[9] += 1;

}

}

// same thing but for Psi_r_skeleton

g_start10[g,1] = len_free[10] + 1;

g_start10[g,2] = pos[10];

for (i in 1:(m-1)) {

for (j in (i+1):m) {

if (is_inf(Psi_r_skeleton[g,j,i])) {

if (w10skel[pos[10],2] == 0 || w10skel[pos[10],3] == 1) len_free[10] += 1;

pos[10] += 1;

}

if (is_inf(Psi_r_skeleton_f[g,j,i])) {

if (w11skel[pos[11],2] == 0 || w11skel[pos[11],3] == 1) len_free[11] += 1;

pos[11] += 1;

}

}

}

if (!use_cov) {

// same thing but for Nu_skeleton

// pos = len_free13 + 1;

g_start13[g,1] = len_free[13] + 1;

g_start13[g,2] = pos[13];

for (i in 1:(p+q)) {

if (is_inf(Nu_skeleton[g,i,1])) {

if (w13skel[pos[13],2] == 0 || w13skel[pos[13],3] == 1) len_free[13] += 1;

pos[13] += 1;

}

}

// same thing but for Alpha_skeleton

g_start14[g,1] = len_free[14] + 1;

g_start14[g,2] = pos[14];

for (i in 1:(m+n)) {

if (is_inf(Alpha_skeleton[g,i,1])) {

if (w14skel[pos[14],2] == 0 || w14skel[pos[14],3] == 1) len_free[14] += 1;

pos[14] += 1;

}

}

}

// same thing but for Tau_skeleton

g_start15[g,1] = len_free[15] + 1;

g_start15[g,2] = pos[15];

for (i in 1:(sum(nlevs) - Nord)) {

if (is_inf(Tau_skeleton[g,i,1])) {

if (w15skel[pos[15],2] == 0 || w15skel[pos[15],3] == 1) len_free[15] += 1;

pos[15] += 1;

}

}

// now level 2

// count free elements in Lambda_y_skeleton

g_start1_c[g,1] = len_free_c[1] + 1;

g_start1_c[g,2] = pos_c[1];

for (i in 1:p_c) {

for (j in 1:m_c) {

if (is_inf(Lambda_y_skeleton_c[g,i,j])) {

if (w1skel_c[pos_c[1],2] == 0 || w1skel_c[pos_c[1],3] == 1) len_free_c[1] += 1;

pos_c[1] += 1;

}

}

}

// same thing but for B_skeleton

g_start4_c[g,1] = len_free_c[4] + 1;

g_start4_c[g,2] = pos_c[4];

for (i in 1:m_c) {

for (j in 1:m_c) {

if (is_inf(B_skeleton_c[g,i,j])) {

if (w4skel_c[pos_c[4],2] == 0 || w4skel_c[pos_c[4],3] == 1) len_free_c[4] += 1;

pos_c[4] += 1;

}

}

}

// same thing but for Theta_skeleton

g_start5_c[g,1] = len_free_c[5] + 1;

g_start5_c[g,2] = pos_c[5];

for (i in 1:p_c) {

if (is_inf(Theta_skeleton_c[g,i,i])) {

if (w5skel_c[pos_c[5],2] == 0 || w5skel_c[pos_c[5],3] == 1) len_free_c[5] += 1;

pos_c[5] += 1;

}

}

// same thing but for Theta_r_skeleton

g_start7_c[g,1] = len_free_c[7] + 1;

g_start7_c[g,2] = pos_c[7];

for (i in 1:(p_c-1)) {

for (j in (i+1):p_c) {

if (is_inf(Theta_r_skeleton_c[g,j,i])) {

if (w7skel_c[pos_c[7],2] == 0 || w7skel_c[pos_c[7],3] == 1) len_free_c[7] += 1;

pos_c[7] += 1;

}

}

}

// same thing but for Psi_skeleton

g_start9_c[g,1] = len_free_c[9] + 1;

g_start9_c[g,2] = pos_c[9];

for (i in 1:m_c) {

if (is_inf(Psi_skeleton_c[g,i,i])) {

if (w9skel_c[pos_c[9],2] == 0 || w9skel_c[pos_c[9],3] == 1) len_free_c[9] += 1;

pos_c[9] += 1;

}

}

// same thing but for Psi_r_skeleton

g_start10_c[g,1] = len_free_c[10] + 1;

g_start10_c[g,2] = pos_c[10];

for (i in 1:(m_c-1)) {

for (j in (i+1):m_c) {

if (is_inf(Psi_r_skeleton_c[g,j,i])) {

if (w10skel_c[pos_c[10],2] == 0 || w10skel_c[pos_c[10],3] == 1) len_free_c[10] += 1;

pos_c[10] += 1;

}

if (is_inf(Psi_r_skeleton_f_c[g,j,i])) {

if (w11skel_c[pos_c[11],2] == 0 || w11skel_c[pos_c[11],3] == 1) len_free_c[11] += 1;

pos_c[11] += 1;

}

}

}

// same thing but for Nu_skeleton

// pos = len_free13 + 1;

g_start13_c[g,1] = len_free_c[13] + 1;

g_start13_c[g,2] = pos_c[13];

for (i in 1:p_c) {

if (is_inf(Nu_skeleton_c[g,i,1])) {

if (w13skel_c[pos_c[13],2] == 0 || w13skel_c[pos_c[13],3] == 1) len_free_c[13] += 1;

pos_c[13] += 1;

}

}

// same thing but for Alpha_skeleton

g_start14_c[g,1] = len_free_c[14] + 1;

g_start14_c[g,2] = pos_c[14];

for (i in 1:m_c) {

if (is_inf(Alpha_skeleton_c[g,i,1])) {

if (w14skel_c[pos_c[14],2] == 0 || w14skel_c[pos_c[14],3] == 1) len_free_c[14] += 1;

pos_c[14] += 1;

}

}

}

// for clusterwise loglik computations

if (max(nclus[,2]) > 1) for (i in 1:max(nclus[,2])) intone[i] = 1;

if (!ord && (use_suff || use_cov)) {

// sufficient stat matrices by pattern, moved to left for missing

for (patt in 1:Np) {

Sstar[patt] = rep_matrix(0, p + q, p + q);

Sstar[patt, 1:Nobs[patt], 1:Nobs[patt]] = S[patt, Obsvar[patt, 1:Nobs[patt]], Obsvar[patt, 1:Nobs[patt]]];

for (j in 1:Nobs[patt]) {

YXbarstar[patt,j] = YXbar[patt, Obsvar[patt,j]];

}

}

}

}

parameters {

// free elements (possibly with inequality constraints) for coefficient matrices

vector[len_free[1]] Lambda_y_free;

vector[len_free[4]] B_free;

vector<lower=0>[len_free[5]] Theta_sd_free;

vector<lower=-1,upper=1>[len_free[7]] Theta_r_free; // to use beta prior

vector<lower=0>[len_free[9]] Psi_sd_free;

array[nblk[1]] corr_matrix[psidims[1]] Psi_r_mat_1;

array[nblk[2]] corr_matrix[psidims[2]] Psi_r_mat_2;

array[nblk[3]] corr_matrix[psidims[3]] Psi_r_mat_3;

array[nblk[4]] corr_matrix[psidims[4]] Psi_r_mat_4;

array[nblk[5]] corr_matrix[psidims[5]] Psi_r_mat_5;

vector<lower=-1,upper=1>[len_free[10]] Psi_r_free;

vector[len_free[13]] Nu_free;

vector[len_free[14]] Alpha_free;

vector[len_free[15]] Tau_ufree;

vector<lower=0,upper=1>[Noent] z_aug; //augmented ordinal data

vector[len_free_c[1]] Lambda_y_free_c;

vector[len_free_c[4]] B_free_c;

vector<lower=0>[len_free_c[5]] Theta_sd_free_c;

vector<lower=-1,upper=1>[len_free_c[7]] Theta_r_free_c; // to use beta prior

vector<lower=0>[len_free_c[9]] Psi_sd_free_c;

array[nblk_c[1]] corr_matrix[psidims_c[1]] Psi_r_mat_1_c;

array[nblk_c[2]] corr_matrix[psidims_c[2]] Psi_r_mat_2_c;

array[nblk_c[3]] corr_matrix[psidims_c[3]] Psi_r_mat_3_c;

array[nblk_c[4]] corr_matrix[psidims_c[4]] Psi_r_mat_4_c;

array[nblk_c[5]] corr_matrix[psidims_c[5]] Psi_r_mat_5_c;

vector<lower=-1,upper=1>[len_free_c[10]] Psi_r_free_c;

vector[len_free_c[13]] Nu_free_c;

vector[len_free_c[14]] Alpha_free_c;

}

transformed parameters {

array[Ng] matrix[p, m] Lambda_y;

array[Ng] matrix[m, m] B;

array[Ng] matrix[p, p] Theta_sd;

array[Ng] matrix[p, p] T_r_lower;

array[Ng] matrix[p, p] Theta_r;

array[Ng] matrix[p + q, 1] Nu;

array[Ng] matrix[m + n, 1] Alpha;

array[Ng] matrix[p_c, m_c] Lambda_y_c;

array[Ng] matrix[m_c, m_c] B_c;

array[Ng] matrix[p_c, p_c] Theta_sd_c;

array[Ng] matrix[p_c, p_c] T_r_lower_c;

array[Ng] matrix[p_c, p_c] Theta_r_c;

array[Ng] matrix[p_c, 1] Nu_c;

array[Ng] matrix[m_c, 1] Alpha_c;

array[Ng] matrix[sum(nlevs) - Nord, 1] Tau_un;

array[Ng] matrix[sum(nlevs) - Nord, 1] Tau;

vector[len_free[15]] Tau_free;

real tau_jacobian;

array[Ng] matrix[m, m] Psi;

array[Ng] matrix[m, m] Psi_sd;

array[Ng] matrix[m, m] Psi_r_lower;

array[Ng] matrix[m, m] Psi_r;

array[Ng] matrix[m_c, m_c] Psi_c;

array[Ng] matrix[m_c, m_c] Psi_sd_c;

array[Ng] matrix[m_c, m_c] Psi_r_lower_c;

array[Ng] matrix[m_c, m_c] Psi_r_c;

vector[len_free[1]] lambda_y_primn;

vector[len_free[4]] b_primn;

vector[len_free[13]] nu_primn;

vector[len_free[14]] alpha_primn;

vector[len_free[15]] tau_primn;

vector[len_free_c[1]] lambda_y_primn_c;

vector[len_free_c[4]] b_primn_c;

vector[len_free_c[13]] nu_primn_c;

vector[len_free_c[14]] alpha_primn_c;

array[Ng] matrix[p, m] Lambda_y_A; // = Lambda_y * (I - B)^{-1}

array[Ng] matrix[p_c, m_c] Lambda_y_A_c;

array[Ng] vector[p + q] Mu;

array[Ng] matrix[p + q, p + q] Sigma; // model covariance matrix

array[Ng] matrix[p + q, p + q] Sigmainv_grp; // model covariance matrix

array[Ng] real logdetSigma_grp;

array[Np] matrix[p + q + 1, p + q + 1] Sigmainv; // for updating S^-1 by missing data pattern

array[Ng] vector[p_c] Mu_c;

array[Ng] matrix[p_c, p_c] Sigma_c; // level 2 model covariance matrix

array[Ng] matrix[N_both + N_within, N_both + N_within] S_PW;

array[Ntot] vector[p + q] YXstar;

array[Ntot] vector[Nord] YXostar; // ordinal data

for (g in 1:Ng) {

// model matrices

Lambda_y[g] = fill_matrix(Lambda_y_free, Lambda_y_skeleton[g], w1skel, g_start1[g,1], g_start1[g,2]);

B[g] = fill_matrix(B_free, B_skeleton[g], w4skel, g_start4[g,1], g_start4[g,2]);

Theta_sd[g] = fill_matrix(Theta_sd_free, Theta_skeleton[g], w5skel, g_start5[g,1], g_start5[g,2]);

T_r_lower[g] = fill_matrix(Theta_r_free, Theta_r_skeleton[g], w7skel, g_start7[g,1], g_start7[g,2]);

Theta_r[g] = T_r_lower[g] + transpose(T_r_lower[g]) - diag_matrix(rep_vector(1, p));

if (!use_cov) {

Nu[g] = fill_matrix(Nu_free, Nu_skeleton[g], w13skel, g_start13[g,1], g_start13[g,2]);

Alpha[g] = fill_matrix(Alpha_free, Alpha_skeleton[g], w14skel, g_start14[g,1], g_start14[g,2]);

}

Psi[g] = diag_matrix(rep_vector(0, m));

if (m > 0) {

Psi_sd[g] = fill_matrix(Psi_sd_free, Psi_skeleton[g], w9skel, g_start9[g,1], g_start9[g,2]);

Psi_r_lower[g] = fill_matrix(Psi_r_free, Psi_r_skeleton[g], w10skel, g_start10[g,1], g_start10[g,2]);

Psi_r[g] = Psi_r_lower[g] + transpose(Psi_r_lower[g]) - diag_matrix(rep_vector(1, m));

}

// level 2 matrices

Lambda_y_c[g] = fill_matrix(Lambda_y_free_c, Lambda_y_skeleton_c[g], w1skel_c, g_start1_c[g,1], g_start1_c[g,2]);

B_c[g] = fill_matrix(B_free_c, B_skeleton_c[g], w4skel_c, g_start4_c[g,1], g_start4_c[g,2]);

Theta_sd_c[g] = fill_matrix(Theta_sd_free_c, Theta_skeleton_c[g], w5skel_c, g_start5_c[g,1], g_start5_c[g,2]);

T_r_lower_c[g] = fill_matrix(Theta_r_free_c, Theta_r_skeleton_c[g], w7skel_c, g_start7_c[g,1], g_start7_c[g,2]);

Theta_r_c[g] = T_r_lower_c[g] + transpose(T_r_lower_c[g]) - diag_matrix(rep_vector(1, p_c));

Nu_c[g] = fill_matrix(Nu_free_c, Nu_skeleton_c[g], w13skel_c, g_start13_c[g,1], g_start13_c[g,2]);

Alpha_c[g] = fill_matrix(Alpha_free_c, Alpha_skeleton_c[g], w14skel_c, g_start14_c[g,1], g_start14_c[g,2]);

Psi_c[g] = diag_matrix(rep_vector(0, m_c));

if (m_c > 0) {

Psi_sd_c[g] = fill_matrix(Psi_sd_free_c, Psi_skeleton_c[g], w9skel_c, g_start9_c[g,1], g_start9_c[g,2]);

Psi_r_lower_c[g] = fill_matrix(Psi_r_free_c, Psi_r_skeleton_c[g], w10skel_c, g_start10_c[g,1], g_start10_c[g,2]);

Psi_r_c[g] = Psi_r_lower_c[g] + transpose(Psi_r_lower_c[g]) - diag_matrix(rep_vector(1, m_c));

}

}

if (sum(nblk) > 0) {

// we need to define a separate parameter for each dimension of correlation matrix,

// so we need all these Psi_r_mats

Psi_r = fill_cov(Psi_r, blkse, nblk, Psi_r_mat_1, Psi_r_mat_2, Psi_r_mat_3, Psi_r_mat_4, Psi_r_mat_5);

}

if (sum(nblk_c) > 0) {

Psi_r_c = fill_cov(Psi_r_c, blkse_c, nblk_c, Psi_r_mat_1_c, Psi_r_mat_2_c, Psi_r_mat_3_c, Psi_r_mat_4_c, Psi_r_mat_5_c);

}

// see https://books.google.com/books?id=9AC-s50RjacC&lpg=PP1&dq=LISREL&pg=PA3#v=onepage&q=LISREL&f=false

for (g in 1:Ng) {

if (m > 0) {

Lambda_y_A[g] = mdivide_right(Lambda_y[g], I - B[g]); // = Lambda_y * (I - B)^{-1}

Psi[g] = quad_form_sym(Psi_r[g], Psi_sd[g]);

}

if (!use_cov) {

Mu[g] = to_vector(Nu[g]);

} else if(has_data) {

Mu[g] = YXbar[g]; // doesn't enter in likelihood, just for lppd + loo

}

if (p > 0) {

Sigma[g, 1:p, 1:p] = quad_form_sym(Theta_r[g], Theta_sd[g]);

if (m > 0) {

Sigma[g, 1:p, 1:p] += quad_form_sym(Psi[g], transpose(Lambda_y_A[g]));

if (!use_cov) Mu[g, 1:p] += to_vector(Lambda_y_A[g] * Alpha[g, 1:m, 1]);

}

}

if (m_c > 0) {

Lambda_y_A_c[g] = mdivide_right(Lambda_y_c[g], I_c - B_c[g]);

Psi_c[g] = quad_form_sym(Psi_r_c[g], Psi_sd_c[g]);

}

Mu_c[g] = to_vector(Nu_c[g]);

if (p_c > 0) {

Sigma_c[g, 1:p_c, 1:p_c] = quad_form_sym(Theta_r_c[g], Theta_sd_c[g]);

if (m_c > 0) {

Sigma_c[g, 1:p_c, 1:p_c] += quad_form_sym(Psi_c[g], transpose(Lambda_y_A_c[g]));

Mu_c[g, 1:p_c] += to_vector(Lambda_y_A_c[g] * Alpha_c[g, 1:m_c, 1]);

}

}

if (nclus[g,2] > 1) {

// remove between variables, for likelihood computations

S_PW[g] = cov_w[g, between_idx[(N_between + 1):p_tilde], between_idx[(N_between + 1):p_tilde]];

}

}

// obtain ordered thresholds; NB untouched for two-level models

if (ord) {

int opos = 1;

int ofreepos = 1;

tau_jacobian = 0;

for (g in 1:Ng) {

int vecpos = 1;

Tau_un[g] = fill_matrix(Tau_ufree, Tau_skeleton[g], w15skel, g_start15[g,1], g_start15[g,2]);

for (i in 1:Nord) {

for (j in 1:(nlevs[i] - 1)) {

real rc = Tau_skeleton[g, vecpos, 1];

int eq = w15skel[opos, 1];

int wig = w15skel[opos, 3];

if (is_inf(rc)) {

if (eq == 0 || wig == 1) {

if (j == 1) {

Tau[g, vecpos, 1] = Tau_un[g, vecpos, 1];

} else {

Tau[g, vecpos, 1] = Tau[g, (vecpos - 1), 1] + exp(Tau_un[g, vecpos, 1]);

}

Tau_free[ofreepos] = Tau[g, vecpos, 1];

// this is used if a prior goes on Tau_free, instead of Tau_ufree:

//if (j > 1) {

// tau_jacobian += Tau_un[g, vecpos, 1]; // see https://mc-stan.org/docs/2_24/reference-manual/ordered-vector.html

// }

ofreepos += 1;

} else if (eq == 1) {

int eqent = w15skel[opos, 2];

Tau[g, vecpos, 1] = Tau_free[eqent];

}

opos += 1;

} else {

// fixed value

Tau[g, vecpos, 1] = Tau_un[g, vecpos, 1];

}

vecpos += 1;

}

}

}

}

// prior vectors

if (wigind) {

lambda_y_primn = fill_prior(Lambda_y_free, lambda_y_mn, w1skel);

b_primn = fill_prior(B_free, b_mn, w4skel);

nu_primn = fill_prior(Nu_free, nu_mn, w13skel);

alpha_primn = fill_prior(Alpha_free, alpha_mn, w14skel);

tau_primn = fill_prior(Tau_ufree, tau_mn, w15skel);

lambda_y_primn_c = fill_prior(Lambda_y_free_c, lambda_y_mn_c, w1skel_c);

b_primn_c = fill_prior(B_free_c, b_mn_c, w4skel_c);

nu_primn_c = fill_prior(Nu_free_c, nu_mn_c, w13skel_c);

alpha_primn_c = fill_prior(Alpha_free_c, alpha_mn_c, w14skel_c);

} else {

lambda_y_primn = to_vector(lambda_y_mn);

b_primn = to_vector(b_mn);

nu_primn = to_vector(nu_mn);

alpha_primn = to_vector(alpha_mn);

tau_primn = to_vector(tau_mn);

lambda_y_primn_c = to_vector(lambda_y_mn_c);

b_primn_c = to_vector(b_mn_c);

nu_primn_c = to_vector(nu_mn_c);

alpha_primn_c = to_vector(alpha_mn_c);

}

// NB nothing below this will be used for two level, because we need other tricks to

// compute the likelihood

// continuous responses underlying ordinal data

if (ord) {

int idxvec = 0;

for (patt in 1:Np) {

for (i in startrow[patt]:endrow[patt]) {

for (j in 1:Nordobs[patt]) {

int obspos = OrdObsvar[patt,j];

int vecpos = YXo[i,obspos] - 1;

idxvec += 1;

if (obspos > 1) vecpos += sum(nlevs[1:(obspos - 1)]) - (obspos - 1);

if (YXo[i,obspos] == 1) {

YXostar[i,obspos] = -10 + (Tau[grpnum[patt], (vecpos + 1), 1] + 10) .* z_aug[idxvec];

tau_jacobian += log(abs(Tau[grpnum[patt], (vecpos + 1), 1] + 10)); // must add log(U) to tau_jacobian

} else if (YXo[i,obspos] == nlevs[obspos]) {

YXostar[i,obspos] = Tau[grpnum[patt], vecpos, 1] + (10 - Tau[grpnum[patt], vecpos, 1]) .* z_aug[idxvec];

tau_jacobian += log(abs(10 - Tau[grpnum[patt], vecpos, 1]));

} else {

YXostar[i,obspos] = Tau[grpnum[patt], vecpos, 1] + (Tau[grpnum[patt], (vecpos + 1), 1] - Tau[grpnum[patt], vecpos, 1]) .* z_aug[idxvec];

tau_jacobian += Tau_un[grpnum[patt], (vecpos + 1), 1]; // jacobian is log(exp(Tau_un))

}

YXstar[i, ordidx[obspos]] = YXostar[i, obspos];

}

}

}

}

if (Ncont > 0) {

for (patt in 1:Np) {

for (i in startrow[patt]:endrow[patt]) {

for (j in 1:Ncont) {

YXstar[i, contidx[j]] = YX[i,j];

}

}

}

}

// move observations to the left

if (missing) {

for (patt in 1:Np) {

for (i in startrow[patt]:endrow[patt]) {