Today’s Class

- Introduction to Generalized Linear Models

- Expanding your linear models knowledge to models for outcomes that are not conditionally normally distributed

- An example of generalized models for binary data using logistic regression

- Matrix Algebra

An Introduction to Generalized Linear Models

Categories of Multivariate Models

Statistical models can be broadly organized as:

- General (normal outcome) vs. Generalized (not normal outcome)

- One dimension of sampling (one variance term per outcome) vs. multiple dimensions of sampling (multiple variance terms)

- Fixed effects only vs. Mixed effects (fixed and random effects = multilevel)

All models have fixed effects, and then:

- General Linear Models (GLM): conditionally normal distribution of data, fixed effects and no random effects

- General Linear Mixed Models (GLMM): conditionally normal distribution for data, fixed and random effects

- Generalized Linear Models: any conditional distribution for data, fixed effects through link functions, no random effects

- Generalized Linear Mixed Models: any conditional distribution for data, fixed effects through link functions, fixed and random effects

“Linear” means the fixed effects predict the link-transformed DV in a linear combination of predictors and their effects (effect × predictor, summed over predictors)

Unpacking the Big Picture

Substantive theory: what guides your study

Hypothetical causal process: what the statistical model is testing (attempting to falsify) when estimated

Observed outcomes: what you collect and evaluate based on your theory

Outcomes can take many forms:

Continuous variables (e.g., time, blood pressure, height)

Categorical variables (e.g., Likert-type response, ordered categories, nominal categories)

Combinations of continuous and categorical (e.g., either 0 or some other continuous number)

The Goal of Generalized Models

Generalized models connect a substantive theory to the sample space (the range of possible values) of the observed outcomes

- Sample space = type/range/outcomes that are possible

The core idea is that a statistical model will not approximate the outcome well if its assumed distribution is a poor fit for the outcome’s sample space.

- If model does not fit the outcome, the findings cannot be trusted

The key to making all of this work is the use of differing statistical distributions for the outcome

Generalized models allow for different distributions for outcomes

- The mean of the distribution is still modeled by the model for the means (the fixed effects)

- The variance of the distribution may or may not be modeled (some distributions don’t have variance terms)

What kind of outcome? Generalized vs. General

Generalized Linear Models are an extension of General Linear Models for outcomes with non-normal residuals. They predict a transformed Y (via a link function) instead of Y on its original scale

Many kinds of non-normally distributed outcomes have some kind of generalized linear model to go with them:

- Binary (dichotomous)

- Unordered categorical (nominal)

- Ordered categorical (ordinal)

- Counts (discrete, positive values)

- Censored (piled up and cut off at one end – left or right)

- Zero-inflated (pile of 0’s, then some distribution after)

- Continuous but skewed data (pile on one end, long tail)

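Common distributions and canonical link functions (from Wikipedia)

| Distribution | Support of distribution | Typical uses | Link name | Link function | Mean function |
|---|---|---|---|---|---|
| Normal | real: $(-\infty, +\infty)$ | Linear-response data | Identity | $X\beta = \mu$ | $\mu = X\beta$ |
| Exponential | real: $(0, +\infty)$ | Exponential-response data, scale parameters | Negative inverse | $X\beta = -\mu^{-1}$ | $\mu = -(X\beta)^{-1}$ |
| Gamma | real: $(0, +\infty)$ | Exponential-response data, scale parameters | Negative inverse | $X\beta = -\mu^{-1}$ | $\mu = -(X\beta)^{-1}$ |
| Inverse Gaussian | real: $(0, +\infty)$ | | Inverse squared | $X\beta = \mu^{-2}$ | $\mu = (X\beta)^{-1/2}$ |
| Poisson | integer: $0, 1, 2, \ldots$ | count of occurrences in fixed amount of time/space | Log | $X\beta = \ln(\mu)$ | $\mu = \exp(X\beta)$ |
| Bernoulli | integer: $\{0, 1\}$ | outcome of single yes/no occurrence | Logit | $X\beta = \ln\left(\frac{\mu}{1-\mu}\right)$ | $\mu = \frac{\exp(X\beta)}{1+\exp(X\beta)}$ |
| Binomial | integer: $0, 1, \ldots, N$ | count of # of "yes" occurrences out of N yes/no occurrences | Logit | | |
| Categorical | integer: $[0, K)$ | outcome of single K-way occurrence | Logit | | |
| Categorical | K-vector of integer: $[0, 1]$, where exactly one element in the vector has the value 1 | outcome of single K-way occurrence | Logit | | |
| Multinomial | K-vector of integer: $[0, N]$ | count of occurrences of different types (1, ..., K) out of N total K-way occurrences | Logit | | |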

Three Parts of a Generalized Linear Model

Link Function (main difference from GLM):

How a non-normal outcome gets transformed into something we can predict that is more continuous (unbounded)

For outcomes that are already normal, general linear models are just a special case with an “identity” link function (Y * 1)

Model for the Means (“Structural Model”):

How predictors are linearly related to the link-transformed outcome

- Or predictor non-linearly related to the original scale of outcome

- The model now predicts the new link-transformed outcome, not the outcome on its original scale

Model for the Variance (“Sampling/Stochastic Model”):

- If the errors aren’t normally distributed, then what are they?

- Family of alternative distributions at our disposal that map onto what the distribution of errors could possibly look like

- In logistic regression, you often hear sayings like “no error term exists” or “the error term has a binomial distribution” (more accurately, the variance is determined by the mean, following a binomial distribution).

Link Functions: How Generalized Models Work

Generalized models work by providing a mapping of the theoretical portion of the model (the right hand side of the equation) to the sample space of the outcome (the left hand side of the equation)

- The mapping is done by a feature called a link function

The link function is a non-linear function that takes the linear model predictors, random/latent terms, and constants and puts them onto the space of the outcome observed variables

Link functions are typically expressed for the mean of the outcome variable (we will only focus on that)

- In generalized models, the error variance is often a function of the mean, so estimating a separate error term would add no additional information

Link Functions in Practice

- The link function expresses the conditional value of the mean of the outcome

$$E(Y_p) = \hat{Y}_p = \mu_y$$

where $E(\cdot)$ stands for expectation.

… through a non-linear link function $g(\cdot)$ when used on the conditional mean of the outcome

or its inverse link function $g^{-1}(\cdot)$ when used on the linear combination of predictors

The general form is:

$$E(Y_p) = \hat{Y}_p = \mu_y = g^{-1}(\beta_0 + \beta_1 X_p + \cdots)$$

The argument of $g^{-1}(\cdot)$ is the linear combination of predictors and their effects.
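As a rough illustration of the general form, base R's family objects carry both directions of the mapping. The sketch below assumes only the `stats` package that ships with R; the linear-predictor values in `eta` are made up for illustration.

```r
# Each family object stores a link g() and its inverse g^{-1}()
fam_logit <- binomial(link = "logit")
fam_log   <- poisson(link = "log")
fam_ident <- gaussian(link = "identity")

# Hypothetical values of the linear predictor (any real number is allowed)
eta <- c(-3, -1, 0, 1, 3)

# Inverse link: linear predictor -> conditional mean on the outcome's scale
mu_logit <- fam_logit$linkinv(eta)  # probabilities strictly between 0 and 1
mu_log   <- fam_log$linkinv(eta)    # positive expected counts
mu_ident <- fam_ident$linkinv(eta)  # unchanged

# Link: conditional mean -> linear predictor (recovers eta)
eta_back <- fam_logit$linkfun(mu_logit)

print(round(cbind(eta, mu_logit, mu_log, mu_ident, eta_back), 3))
```

The same linear predictor lands in a different sample space depending on which inverse link is applied, which is the point of the general form.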

Why normal GLM is one type of Generalized Linear Model

- Our familiar general linear model is actually a member of the generalized model family (it is subsumed)

- The link function is called the identity, the linear predictor is unchanged

- The normal distribution has two parameters, a mean and a variance

- Unlike most distributions, the normal distribution parameters are directly modeled by the GLM

- In conditionally normal GLMs, the inverse link function is called the identity function:

$$g^{-1}(\cdot) = I(\cdot)$$

The identity function does not alter the predicted values – they can be any real number

This matches the sample space of the normal distribution – the mean can be any real number

- The expected value of an outcome from the GLM is:

$$E(Y_p) = \hat{Y}_p = \mu_y = I(\beta_0 + \beta_1 X_p + \cdots) = \beta_0 + \beta_1 X_p + \cdots$$

About the Variance of GLM

The other parameter of the normal distribution describes the variance of an outcome – called the error (residual) variance

We found that the model for the variance for the GLM was:

$$V(Y_p) = V(e_p) = \sigma^2_e$$

- Similarly, this term directly relates to the variance of the outcome in the normal distribution

- We will quickly see distributions from other families (e.g., the Bernoulli distribution used in logistic regression) where this doesn’t happen

- Error terms are independent of predictors
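One way to see that the normal GLM is subsumed by the generalized family is to fit the same model with `lm()` and with `glm()` using a Gaussian family and identity link. This is a minimal sketch on simulated data; the sample size and the "true" coefficients are arbitrary assumptions.

```r
set.seed(1)
n <- 200
x <- rnorm(n)
y <- 1 + 0.5 * x + rnorm(n, sd = 2)  # hypothetical data-generating values

# General linear model
fit_lm <- lm(y ~ x)

# Same model written as a generalized linear model with an identity link
fit_glm <- glm(y ~ x, family = gaussian(link = "identity"))

coef_lm  <- coef(fit_lm)
coef_glm <- coef(fit_glm)

# Residual variance: lm() reports sigma, glm() reports the dispersion (sigma^2)
sigma2_lm  <- summary(fit_lm)$sigma^2
sigma2_glm <- summary(fit_glm)$dispersion

print(rbind(lm = c(coef_lm, sigma2 = sigma2_lm),
            glm = c(coef_glm, sigma2 = sigma2_glm)))
```

Both rows should agree, since the two calls describe the same model.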

Generalized Linear Models For Binary Data

Today’s Data Example

- To help demonstrate generalized models for binary data, we borrow from an example listed on the UCLA ATS website.

- The data is available after updating the `ESRM64503` package

- Data come from a survey of 400 college juniors looking at factors that influence the decision to apply to graduate school:

- Y (outcome): student rating of likelihood he/she will apply to grad school (0 = unlikely; 1 = somewhat likely; 2 = very likely)

- We will first look at Y for two categories (0 = unlikely; 1 = somewhat or very likely) – we merged the ‘somewhat likely’ and ‘very likely’ categories for this illustration.

- In practice, you would typically use a model designed for three or more ordered categories (like an ordinal logistic regression).

- ParentEd: indicator (0/1) if one or more parent has graduate degree

- Public: indicator (0/1) if student attends a public university

- GPA: grade point average on 4 point scale (4.0 = perfect)


Descriptive Statistics for Data

```r
library(ESRM64503)
library(tidyverse)
library(kableExtra)

# Summary statistics for the continuous predictor
Desp_GPA <- dataLogit |>
  summarise(
    Variable = "GPA",
    N = n(),
    Mean = mean(GPA),
    `Std Dev` = sd(GPA),
    Minimum = min(GPA),
    Maximum = max(GPA)
  )

# Frequency tables for the categorical variables
Desp_Apply <- dataLogit |>
  group_by(APPLY) |>
  summarise(
    Frequency = n(),
    Percent = n() / nrow(dataLogit) * 100
  ) |>
  ungroup() |>
  mutate(
    `Cumulative Frequency` = cumsum(Frequency),
    `Cumulative Percent` = cumsum(Percent)
  )

Desp_LLApply <- dataLogit |>
  group_by(LLAPPLY) |>
  summarise(
    Frequency = n(),
    Percent = n() / nrow(dataLogit) * 100
  ) |>
  ungroup() |>
  mutate(
    `Cumulative Frequency` = cumsum(Frequency),
    `Cumulative Percent` = cumsum(Percent)
  )

Desp_PARED <- dataLogit |>
  group_by(PARED) |>
  summarise(
    Frequency = n(),
    Percent = n() / nrow(dataLogit) * 100
  ) |>
  ungroup() |>
  mutate(
    `Cumulative Frequency` = cumsum(Frequency),
    `Cumulative Percent` = cumsum(Percent)
  )

Desp_PUBLIC <- dataLogit |>
  group_by(PUBLIC) |>
  summarise(
    Frequency = n(),
    Percent = n() / nrow(dataLogit) * 100
  ) |>
  ungroup() |>
  mutate(
    `Cumulative Frequency` = cumsum(Frequency),
    `Cumulative Percent` = cumsum(Percent)
  )
```

```r
show_table(Desp_Apply) # Likelihood of Applying (0 = unlikely; 1 = somewhat likely; 2 = very likely)
```

| APPLY | Frequency | Percent | Cumulative Frequency | Cumulative Percent |
|---|---|---|---|---|
| 0 | 220 | 55 | 220 | 55 |
| 1 | 140 | 35 | 360 | 90 |
| 2 | 40 | 10 | 400 | 100 |

```r
show_table(Desp_LLApply) # Likelihood of Applying (0 = unlikely; 1 = likely)
```

| LLAPPLY | Frequency | Percent | Cumulative Frequency | Cumulative Percent |
|---|---|---|---|---|
| 0 | 220 | 55 | 220 | 55 |
| 1 | 180 | 45 | 400 | 100 |

```r
show_table(Desp_GPA) # Analysis Variable : GPA
```

| Variable | N | Mean | Std Dev | Minimum | Maximum |
|---|---|---|---|---|---|
| GPA | 400 | 2.998925 | 0.3979409 | 1.9 | 4 |

```r
show_table(Desp_PARED) # Parent Has Graduate Degree
```

| PARED | Frequency | Percent | Cumulative Frequency | Cumulative Percent |
|---|---|---|---|---|
| 0 | 337 | 84.25 | 337 | 84.25 |
| 1 | 63 | 15.75 | 400 | 100.00 |

```r
show_table(Desp_PUBLIC) # Student Attends Public University
```

| PUBLIC | Frequency | Percent | Cumulative Frequency | Cumulative Percent |
|---|---|---|---|---|
| 0 | 343 | 85.75 | 343 | 85.75 |
| 1 | 57 | 14.25 | 400 | 100.00 |

What If We Used a Normal GLM for Binary Outcomes?

- If $Y_p$ is a binary (0 or 1) outcome

- Expected mean is proportion of people who have a 1 (or “p”, the probability of $Y_p = 1$ in the sample)

- The probability of having a 1 is what we’re trying to predict for each person, given the values of his/her predictors

- General linear model: $Y_p = \beta_0 + \beta_1 X_p + e_p$

- $\beta_0$ = expected probability when all predictors are 0

- $\beta_1$ = expected change in probability for a one-unit change in the predictor

- $e_p$ = difference between observed and predicted values

- The general linear model becomes $Y_p$ = (predicted probability of 1) + $e_p$

A General Linear Model of Predicting Binary Outcomes?

But if $Y_p$ is binary and the link function is the identity link, then $e_p$ can only be 2 things:

$e_p = Y_p - \hat{Y}_p$

If $Y_p = 0$ then $e_p$ = (0 - predicted probability)

If $Y_p = 1$ then $e_p$ = (1 - predicted probability)

The mean of errors would still be 0 … by definition

But variance of errors can’t possibly be constant over levels of X like we assume in general linear models

The mean and variance of a binary outcome are dependent!

As shown shortly, mean = p and variance = p * (1 - p), so they are tied together

This means that because the conditional mean of Y (p, the predicted probability Y = 1) is dependent on X, then so is the error variance, violating the assumption of homoscedasticity.
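The tie between mean and variance can be checked numerically. The following sketch simulates binary outcomes at a few arbitrary probabilities (the grid and the number of draws are assumptions for illustration) and compares the sample variance to p * (1 - p).

```r
# Hypothetical grid of probabilities
p_grid <- c(0.1, 0.3, 0.5, 0.7, 0.9)

# Variance implied by the mean for a binary outcome
var_theory <- p_grid * (1 - p_grid)

# Sample variance of simulated 0/1 outcomes at each probability
set.seed(2)
var_sim <- sapply(p_grid, function(p) var(rbinom(1e5, size = 1, prob = p)))

print(round(cbind(p = p_grid, var_theory, var_sim), 4))
```

The variance peaks at p = .5 and shrinks toward the boundaries, so any predictor that moves p also moves the error variance.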

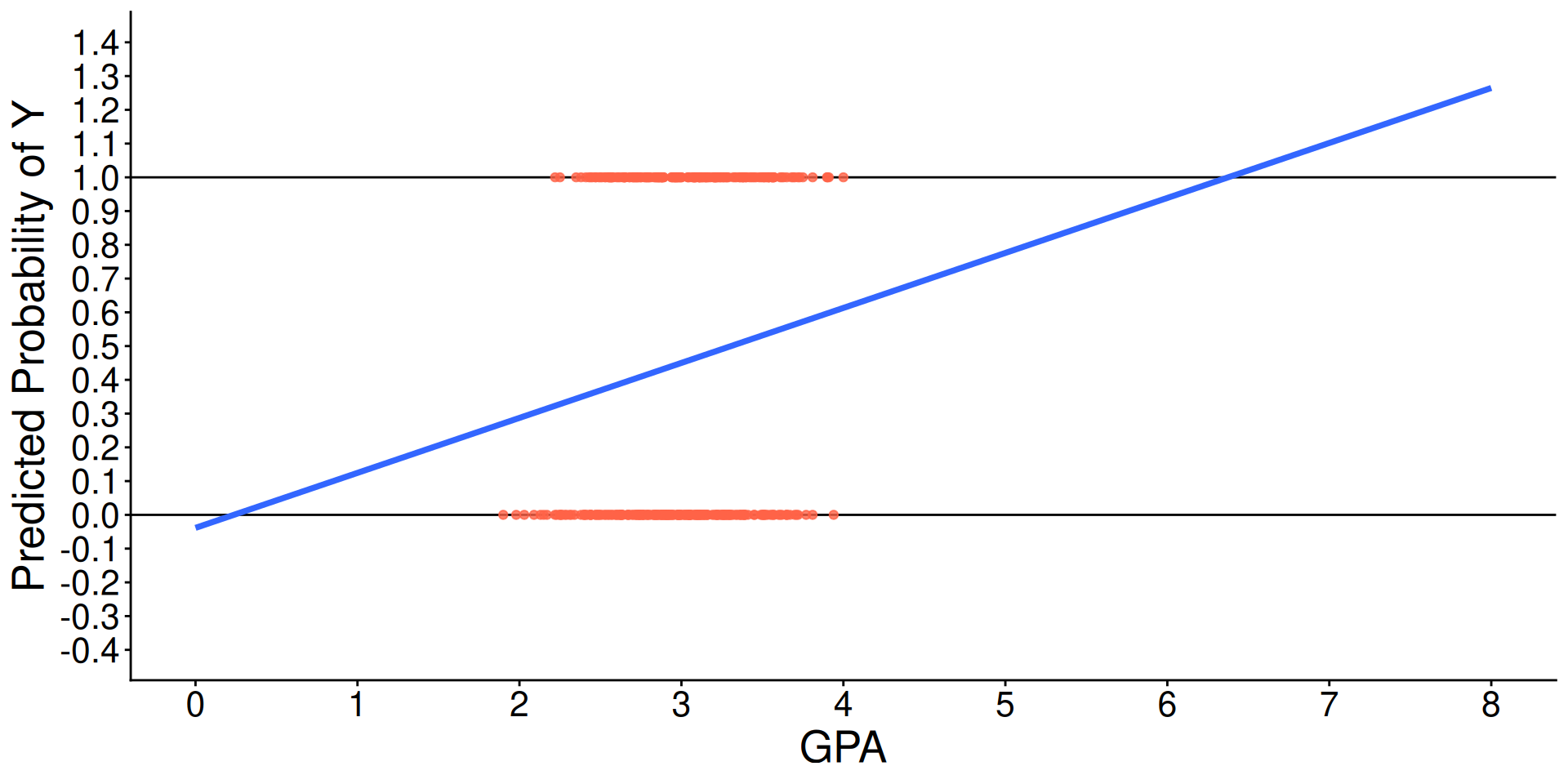

A General Linear Model With Binary Outcomes?

How can we have a linear relationship between X & Y?

Probability of a 1 is bounded between 0 and 1, but predicted probabilities from a linear model aren’t bounded

- Impossible values

The linear relationship needs to ‘shut off’ at the 0 and 1 boundaries, which requires a non-linear function.
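To make the "impossible values" point concrete, here is a small sketch on simulated data (not the course data; the coefficients used to generate it are arbitrary assumptions). It compares predictions from a linear model and a logistic model at extreme predictor values.

```r
set.seed(3)
n <- 400
x <- rnorm(n, mean = 3, sd = 0.4)                 # hypothetical GPA-like predictor
y <- rbinom(n, 1, plogis(-0.3 + 1.5 * (x - 3)))   # hypothetical binary outcome

# Linear model with an identity link vs. logistic regression
fit_lpm   <- lm(y ~ x)
fit_logit <- glm(y ~ x, family = binomial(link = "logit"))

# Predict at values far outside and inside the observed range
new_x <- data.frame(x = c(0, 3, 8))
pred_lpm   <- predict(fit_lpm, newdata = new_x)
pred_logit <- predict(fit_logit, newdata = new_x, type = "response")

print(round(cbind(x = new_x$x, pred_lpm, pred_logit), 3))
```

The linear predictions leave the 0 to 1 range at the extremes, while the logistic predictions flatten out as they approach the boundaries.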

Predicted Regression Line of GLM

⌘+C

library(ggplot2)

ggplot(dataLogit) +

aes(x = GPA, y = LLAPPLY) +

geom_hline(aes( yintercept = 1)) +

geom_hline(aes( yintercept = 0)) +

geom_point(color = "tomato", alpha = .8) +

geom_smooth(method = "lm", se = FALSE, fullrange = TRUE, linewidth = 1.3) +

scale_x_continuous(limits = c(0, 8), breaks = 0:8) +

scale_y_continuous(limits = c(-0.4, 1.4), breaks = seq(-0.4, 1.4, .1)) +

labs(y = "Predicted Probability of Y") +

theme_classic() +

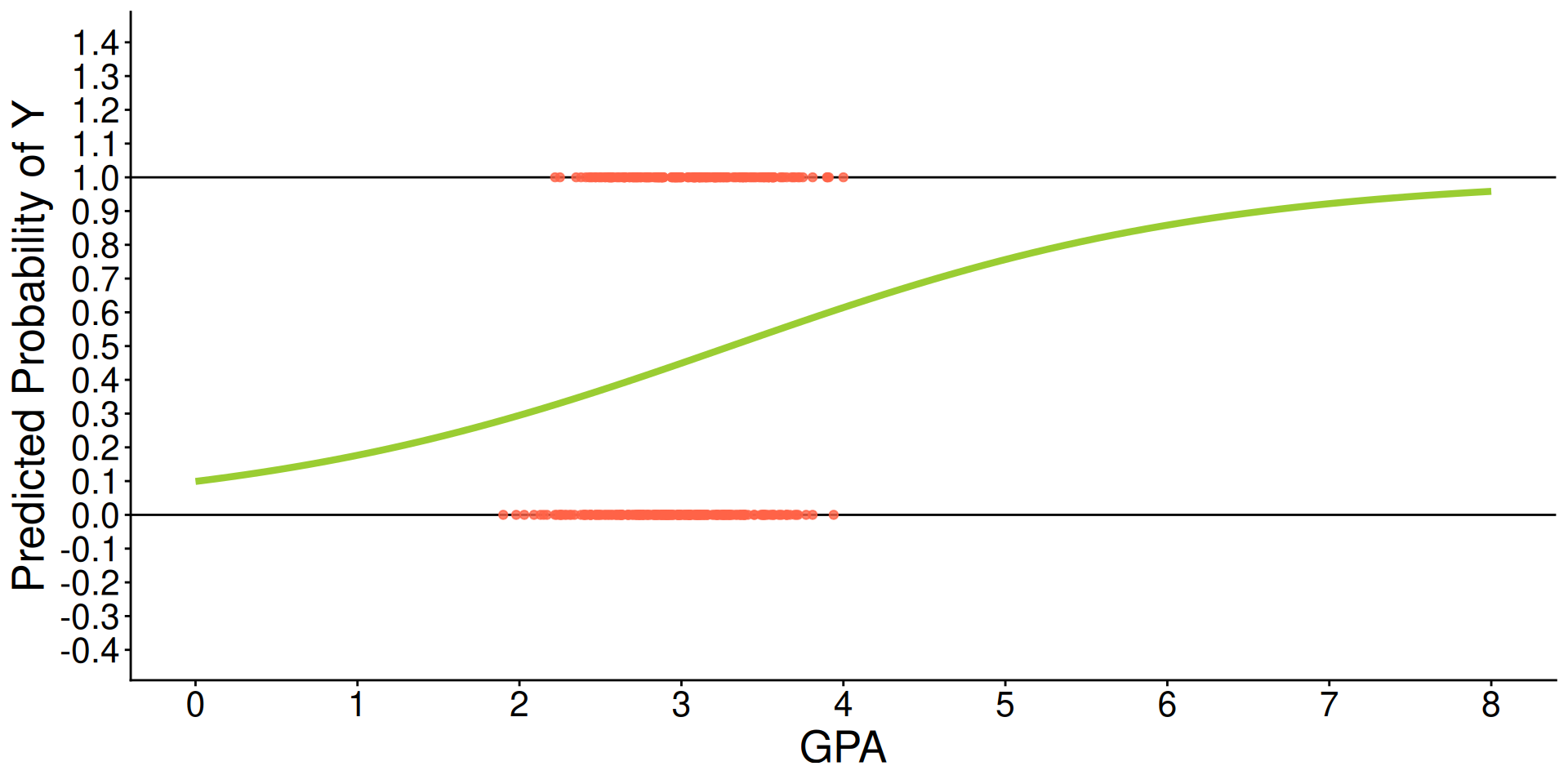

theme(text = element_text(size = 20))Predicted Regression Line of Logistic Regression

```r
# Logistic fit (logit link) over the same GPA range
ggplot(dataLogit) +
  aes(x = GPA, y = LLAPPLY) +
  geom_hline(yintercept = 1) +
  geom_hline(yintercept = 0) +
  geom_point(color = "tomato", alpha = .8) +
  geom_smooth(method = "glm", se = FALSE, fullrange = TRUE,
              method.args = list(family = binomial(link = "logit")),
              color = "yellowgreen", linewidth = 1.5) +
  scale_x_continuous(limits = c(0, 8), breaks = 0:8) +
  scale_y_continuous(limits = c(-0.4, 1.4), breaks = seq(-0.4, 1.4, .1)) +
  labs(y = "Predicted Probability of Y") +
  theme_classic() +
  theme(text = element_text(size = 20))
```

3 Problems with GLM predicting binary outcomes

Assumption Violation problem: GLM for continuous, conditionally normal outcome = residuals can’t be normally distributed

Restricted Range problem (e.g., 0 to 1 for outcomes)

Predictors should not be linearly related to observed outcome

- Effects of predictors need to be ‘shut off’ at some point to keep predicted values of binary outcome within range

Decision-Making Problem: A GLM predicts a continuous value, but the research question is often binary (e.g., ‘will a student apply?’). It’s unclear how to make a decision from a continuous prediction that can be outside the 0-1 range.

- In contrast, a generalized linear model provides a probability (e.g., ‘a student has a 50% probability of applying’), which directly answers the question.

The Binary Outcome: Bernoulli Distribution

The Bernoulli distribution has the following properties

Notation: $Y_p \sim B(p_p)$ (where $p_p$ is the conditional probability of a 1 for person p)

Sample Space: $Y_p \in \{0, 1\}$ ($Y_p$ can either be a 0 or a 1)

Probability Mass Function (PMF): $f(Y_p) = p_p^{Y_p} (1 - p_p)^{1 - Y_p}$

Expected value (mean) of Y: $E(Y_p) = p_p$

Variance of Y: $V(Y_p) = p_p (1 - p_p)$

$p_p$ is the only parameter – so we only need to provide a link function for it …

Generalized Models for Binary Outcomes

Rather than modeling the probability of a 1 directly, we need to transform it into a more continuous variable with a link function, for example:

We could transform probability into odds

Odds: $\frac{p}{1-p}$

For example, if $p = .7$, then Odds(1) = .7/.3 = 2.33; Odds(0) = .3/.7 = .429

Odds scale is way skewed, asymmetric, and ranges from 0 to $+\infty$

- This is not a helpful property

Take the natural log of the odds, called the “logit” link: $\text{logit}(p) = \log\left(\frac{p}{1-p}\right)$

For example, $\text{logit}(.7) = .847$ and $\text{logit}(.3) = -.847$

```r
log(0.7 / (1 - 0.7))
```

```
[1] 0.8472979
```

```r
log(0.3 / (1 - 0.3))
```

```
[1] -0.8472979
```

- Logit scale is now symmetric at $p = .5$ (where the logit is 0)

The logit link is one of many used for the Bernoulli distribution

- Names of others: Probit, Log-Log, Complementary Log-Log
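For comparison, three of these links are built into base R and can be evaluated side by side with `make.link()`. This is only a sketch: the probability grid is arbitrary, and the log-log link is left out because it is not one of `make.link()`'s built-in names.

```r
# Hypothetical grid of probabilities
p <- c(0.1, 0.3, 0.5, 0.7, 0.9)
links <- c("logit", "probit", "cloglog")

# Apply each link to the same probabilities (one column per link)
eta <- sapply(links, function(l) make.link(l)$linkfun(p))
rownames(eta) <- paste0("p=", p)
print(round(eta, 3))

# Each inverse link maps the transformed values back to the probabilities
back <- sapply(links, function(l) {
  lk <- make.link(l)
  lk$linkinv(lk$linkfun(p))
})
```

The logit and probit columns are symmetric around p = .5; the complementary log-log column is not.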

From Logit Transformation to Logistic Regression

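The link function for a logit is defined by:

$$g(E(Y)) = \log\left(\frac{P(Y = 1)}{1 - P(Y = 1)}\right) = \beta_0 + \beta_1 X \tag{1}$$

where $g$ is called the link function, $E(Y)$ is the expectation of Y, and $\beta_0 + \beta_1 X$ is the linear combination of weights and predictors.

A logit can be translated back to a probability with some algebra:

$$P(Y = 1) = \frac{\exp(\beta_0 + \beta_1 X)}{1 + \exp(\beta_0 + \beta_1 X)} \tag{2}$$

From Equation 1 and Equation 2, we can see that $\beta_0 + \beta_1 X$ has a range of $(-\infty, +\infty)$, while P(Y = 1) has a range of (0, 1).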

Interpretation of Coefficients

```r
# Function to translate odds to probability
OR_to_Prob <- function(OR){
  p = OR / (1 + OR)
  return(p)
}

# Function to translate logit to probability
Logit_to_Prob <- function(Logit){
  OR <- exp(Logit)  # odds = exp(logit)
  p = OR_to_Prob(OR)
  return(p)
}

Logit_to_Prob(Logit = -2.007) # p = .118
```

```
[1] 0.1184699
```

```r
exp(-2.007)
```

```
[1] 0.1343912
```

Intercept $\beta_0$:

Logit: The predicted logit of Y = 1 for an individual is $\beta_0$ when all predictors are zero; i.e., the average logit is -2.007

Probability: Alternatively, the expected probability of Y = 1 is $\frac{\exp(\beta_0)}{1 + \exp(\beta_0)}$ when all predictors are zero; i.e., the average probability of applying to grad school is 0.118 (11.8%)

Odds: Alternatively, the expected odds of Y = 1 are $\exp(\beta_0)$ when all predictors are zero. The average odds of applying to grad school are exp(-2.007) = 0.134.

Slope $\beta_1$:

Logit: The predicted increase in the logit of Y = 1 is $\beta_1$ for a one-unit increase in X;

Probability: The expected increase in the probability of Y = 1 is $\frac{\exp(\beta_0 + \beta_1 (X + 1))}{1 + \exp(\beta_0 + \beta_1 (X + 1))} - \frac{\exp(\beta_0 + \beta_1 X)}{1 + \exp(\beta_0 + \beta_1 X)}$ for a one-unit increase in X; note that this increase is non-linear and depends on the value of X.

Odds Ratio: For a one-unit increase in X, the odds of Y = 1 are multiplied by a factor of $\exp(\beta_1)$. This factor is the odds ratio (OR). (Hint: The new odds are $\exp(\beta_0 + \beta_1 (X + 1))$, which is $\exp(\beta_1)$ times the old odds of $\exp(\beta_0 + \beta_1 X)$)
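The contrast between a constant odds ratio and a changing probability difference can be tabulated directly. In this sketch the intercept is the -2.007 used above, while the slope `b1` and the range of `x` are hypothetical values chosen only for illustration.

```r
b0 <- -2.007  # intercept from the example above
b1 <- 0.5     # hypothetical slope, for illustration only
x  <- 0:6

logit <- b0 + b1 * x
odds  <- exp(logit)
prob  <- plogis(logit)  # exp(logit) / (1 + exp(logit))

# Ratio of odds between adjacent values of x: always exp(b1)
odds_ratio <- odds[-1] / odds[-length(odds)]

# Change in probability between adjacent values of x: depends on x
prob_change <- diff(prob)

print(round(cbind(x = x[-1], odds_ratio, prob_change), 4))
```

Every row shows the same odds ratio, but the step in probability grows and then shrinks as x moves along the logistic curve.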

Example: Fitting The Models

Model 0: The empty model for logistic regression with binary variable (applying for grad school) as the outcome

Model 1: The logistic regression model including centered GPA and binary predictors (Parent has graduate degree, Student Attends Public University)

Model 0: The empty model

The statistical form of empty model:

$$P(Y_p = 1) = \frac{\exp(\beta_0)}{1 + \exp(\beta_0)}$$

or

$$\text{logit}(P(Y_p = 1)) = \beta_0$$

Many generalized linear models don’t explicitly show an error term. This is because the variance is a function of the mean (e.g., for a binary outcome, variance = p(1-p)).

For the logit function, the implied error term $e_p$ has a logistic distribution with a zero mean and a variance of $\pi^2/3 = 3.29$.
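The value 3.29 can be verified with a quick simulation from the standard logistic distribution. This is a sketch only; the number of draws is an arbitrary choice.

```r
set.seed(4)

# Draws from the standard logistic distribution (location 0, scale 1)
e <- rlogis(1e6, location = 0, scale = 1)

var_theory <- pi^2 / 3  # theoretical variance
var_sim    <- var(e)    # simulated variance
mean_sim   <- mean(e)   # should be close to 0

print(round(c(var_theory = var_theory, var_sim = var_sim, mean_sim = mean_sim), 3))
```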

- Using the `ordinal` package and its `clm()` function, we can model categorical dependent variables. Two notes on fitting the empty model (`model0`, formula `LLAPPLY ~ 1`, data `dataLogit`):

1. The dependent variable must be stored as a `factor`

2. The `formula` and `data` arguments are identical to `lm`; the `control =` argument is only used here to show the iteration history of the ML algorithm

```
iter:  step factor:     Value:     max|grad|:   Parameters:
  0:     1.000000e+00:    277.259:   2.000e+01:   0
nll reduction:  2.00332e+00
  1:     1.000000e+00:    275.256:   6.640e-02:   0.2
nll reduction:  2.22672e-05
  2:     1.000000e+00:    275.256:   2.222e-06:   0.2007
nll reduction:  -5.68434e-14
  3:     1.000000e+00:    275.256:   2.842e-14:   0.2007

Optimizer converged! Absolute and relative convergence criteria were met
```

glm()

While clm() is powerful for ordinal models (with more than two ordered categories), a more direct function for binary logistic regression in R is glm() from the base stats package.

It’s important to note that clm() and glm() parameterize the intercept differently:

- clm() estimates a threshold ($\tau_0$). The intercept is $\beta_0 = -\tau_0$.

- glm() directly estimates the intercept ($\beta_0$).

Therefore, the intercept from glm() will have the opposite sign of the threshold from clm().

Here is how you would fit the same empty model with glm():

```r
# Alternative using glm()
model0_glm <- glm(LLAPPLY ~ 1, data = dataLogit, family = binomial(link = "logit"))
summary(model0_glm)
```

```
Call:
glm(formula = LLAPPLY ~ 1, family = binomial(link = "logit"),
    data = dataLogit)

Coefficients:
            Estimate Std. Error z value Pr(>|z|)
(Intercept)  -0.2007     0.1005  -1.997   0.0459 *
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 550.51  on 399  degrees of freedom
Residual deviance: 550.51  on 399  degrees of freedom
AIC: 552.51

Number of Fisher Scoring iterations: 3
```

Model 0: Result

```r
summary(model0)
```

```
formula: LLAPPLY ~ 1
data:    dataLogit

 link  threshold nobs logLik  AIC    niter max.grad cond.H
 logit flexible  400  -275.26 552.51 3(0)  2.84e-14 1.0e+00

Threshold coefficients:
    Estimate Std. Error z value
0|1   0.2007     0.1005   1.997
```

```r
N = length(dataLogit$LLAPPLY)
p = sum(dataLogit$LLAPPLY == 1) / N
log(p / (1 - p))
```

```
[1] -0.2006707
```

The clm function outputs a threshold parameter (labelled as $\tau_0$) rather than the intercept ($\beta_0$).

The relationship between $\beta_0$ and $\tau_0$ is $\beta_0 = -\tau_0$

Thus, the estimated $\beta_0$ is $-0.2007$ with SE = 0.1005 for model 0

The predicted logit is -0.2007; the predicted probability is exp(-0.2007)/(1+exp(-0.2007)) = 0.45

The log-likelihood is -275.26; AIC is 552.51
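The empty-model result can be reproduced on any binary variable: the intercept is just the logit of the sample proportion. The sketch below uses simulated data as a stand-in for `dataLogit` (the variable names and the .45 probability are assumptions), and it only runs the `clm()` comparison if the `ordinal` package happens to be installed.

```r
# Simulated stand-in for the course data (hypothetical)
set.seed(64503)
sim <- data.frame(y = rbinom(400, size = 1, prob = 0.45))

# Empty logistic model in base R
fit_glm <- glm(y ~ 1, data = sim, family = binomial(link = "logit"))
intercept_glm <- unname(coef(fit_glm)[1])

# Logit of the observed proportion of 1s
p_hat   <- mean(sim$y)
logit_p <- log(p_hat / (1 - p_hat))
print(c(intercept_glm = intercept_glm, logit_p = logit_p))

# Optional: the same model with clm(), whose threshold has the opposite sign
if (requireNamespace("ordinal", quietly = TRUE)) {
  sim$y_f <- factor(sim$y)  # clm() needs a factor outcome
  fit_clm <- ordinal::clm(y_f ~ 1, data = sim)
  print(c(clm_threshold = unname(coef(fit_clm)[1])))
}
```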

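Model 1: The conditional model

$$P(Y_p = 1) = \frac{\exp(\beta_0 + \beta_1 PARED_p + \beta_2 (GPA_p - 3) + \beta_3 PUBLIC_p)}{1 + \exp(\beta_0 + \beta_1 PARED_p + \beta_2 (GPA_p - 3) + \beta_3 PUBLIC_p)}$$

or

$$\text{logit}(P(Y_p = 1)) = \beta_0 + \beta_1 PARED_p + \beta_2 (GPA_p - 3) + \beta_3 PUBLIC_p$$

```r
# Center GPA at 3.0 so the intercept refers to a student with a 3.0 GPA
dataLogit$GPA3 <- dataLogit$GPA - 3
model1 = clm(LLAPPLY ~ PARED + GPA3 + PUBLIC, data = dataLogit)
summary(model1)
```

```
formula: LLAPPLY ~ PARED + GPA3 + PUBLIC
data:    dataLogit

 link  threshold nobs logLik  AIC    niter max.grad cond.H
 logit flexible  400  -264.96 537.92 3(0)  3.71e-07 1.0e+01

Coefficients:
       Estimate Std. Error z value Pr(>|z|)
PARED    1.0596     0.2974   3.563 0.000367 ***
GPA3     0.5482     0.2724   2.012 0.044178 *
PUBLIC  -0.2006     0.3053  -0.657 0.511283
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Threshold coefficients:
    Estimate Std. Error z value
0|1   0.3382     0.1187   2.849
```

glm()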

Here is the equivalent model using glm(). Notice the intercept has the opposite sign of the threshold from clm(), while the slope coefficients are identical.

```r
# Alternative using glm()
model1_glm <- glm(LLAPPLY ~ PARED + GPA3 + PUBLIC, data = dataLogit, family = binomial(link = "logit"))
summary(model1_glm)
```

```
Call:
glm(formula = LLAPPLY ~ PARED + GPA3 + PUBLIC, family = binomial(link = "logit"),
    data = dataLogit)

Coefficients:
            Estimate Std. Error z value Pr(>|z|)
(Intercept)  -0.3382     0.1187  -2.849 0.004383 **
PARED         1.0596     0.2974   3.563 0.000367 ***
GPA3          0.5482     0.2724   2.012 0.044178 *
PUBLIC       -0.2006     0.3053  -0.657 0.511283
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 550.51  on 399  degrees of freedom
Residual deviance: 529.92  on 396  degrees of freedom
AIC: 537.92

Number of Fisher Scoring iterations: 4
```

Model 1: Results

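LL(Model 1) = -264.96 (LL(Model 0) = -275.26)

$\beta_1$ (PARED) = 1.0596 (SE = 0.2974) with $p$ = .0004

$\beta_2$ (GPA3) = 0.5482 (SE = 0.2724) with $p$ = .0442

$\beta_3$ (PUBLIC) = -0.2006 (SE = 0.3053) with $p$ = .5113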

Understand the results

Question #1: does Model 1 fit better than the empty model (Model 0)?

This question is equivalent to testing the following hypotheses:

$$H_0: \beta_1 = \beta_2 = \beta_3 = 0$$

$$H_1: \text{at least one of } \beta_1, \beta_2, \beta_3 \neq 0$$

We can use the Likelihood Ratio Test:

$$-2\Delta LL = -2 \times (LL_{\text{Model 0}} - LL_{\text{Model 1}}) = 20.586$$

DF = 4 (# of params of Model 1) - 1 (# of params of Model 0) = 3

p-value: p = .0001283

```r
# anova() can compare two nested models
anova(model0, model1)
```

```
Likelihood ratio tests of cumulative link models:

       formula:                        link: threshold:
model0 LLAPPLY ~ 1                     logit flexible
model1 LLAPPLY ~ PARED + GPA3 + PUBLIC logit flexible

       no.par    AIC  logLik LR.stat df Pr(>Chisq)
model0      1 552.51 -275.26
model1      4 537.92 -264.96  20.586  3  0.0001283 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

```r
LL_mod0 = logLik(model0)
LL_mod1 = logLik(model1)
# Or we can use the chi-square distribution to calculate the p-value
pchisq(-2 * (LL_mod0 - LL_mod1), 3, lower.tail = FALSE)
```

```
'log Lik.' 0.0001282981 (df=1)
```

Conclusion: Reject $H_0$. The model with predictors is a significant improvement, so we prefer the conditional model (Model 1)
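The likelihood ratio test can also be worked by hand from the numbers reported in the output above. This sketch uses only those reported values (rounded log-likelihoods, parameter counts, and the LR statistic), so the hand-computed statistic differs slightly from 20.586 because of rounding.

```r
# Values copied from the model output above
ll0 <- -275.26; k0 <- 1  # Model 0: log-likelihood, number of parameters
ll1 <- -264.96; k1 <- 4  # Model 1: log-likelihood, number of parameters

# LR statistic from the rounded log-likelihoods
lr_from_ll <- -2 * (ll0 - ll1)

# Degrees of freedom = difference in number of parameters
df <- k1 - k0

# p-value from the LR statistic reported by anova()
lr_reported <- 20.586
p_value <- pchisq(lr_reported, df = df, lower.tail = FALSE)

# Critical value at alpha = .05 for comparison
crit <- qchisq(0.95, df = df)

print(c(lr_from_ll = lr_from_ll, df = df, p_value = p_value, crit = crit))
```

Because the statistic is far above the critical value, the conclusion matches the one reported above.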

Question #2: Are the effects of GPA, PARED, and PUBLIC significant?

Intercept $\beta_0 = -0.3382$:

Logit: The predicted logit of applying to grad school is -0.3382 for a person with a 3.0 GPA, parents without a graduate degree, and attending a private university.

Odds: The predicted odds of applying are $\exp(-0.3382) = 0.7131$ for this person (when one has a 3.0 GPA and the other variables are at their reference levels, the probability of applying to grad school is 0.71 times the probability of not applying).

Probability: The predicted probability of applying is 41.62% for this person.

Slope of parents having a graduate degree: $\beta_1 = 1.0596$ with SE = 0.2974, $p$ = .0004

Logit: Having a parent with a graduate degree increases the logit of applying by 1.0596, holding other predictors constant.

Odds Ratio: The odds of applying for a student whose parents have a graduate degree are $\exp(1.0596) = 2.885$ times the odds for a student whose parents do not, a nearly 3-fold increase in odds.

Probability: For a student at a private university with a 3.0 GPA, having a parent with a graduate degree increases the probability of applying to grad school from 41.62% to 67.29%.

Slope of attending a public university: $\beta_3 = -0.2006$ with SE = 0.3053, $p$ = .5113

```r
## Interpret output
beta_0 <- -0.3382
beta_3 <- -0.2006

# Odds and probabilities at a baseline logit and at a new logit
OR_p <- function(logit_old, logit_new){
  c(
    OR = exp(logit_old),
    OR_new = exp(logit_new),
    p = exp(logit_old) / (1 + exp(logit_old)),
    p_new = exp(logit_new) / (1 + exp(logit_new))
  )
}

# Baseline: private school (PUBLIC = 0); new: public school (PUBLIC = 1)
Result <- OR_p(logit_old = beta_0, logit_new = (beta_0 + beta_3))
Result
Result[2] / Result[1]
cat(paste0("The odds of applying for grad school for those in public schools are ", round(Result[2] / Result[1], 2), " times the odds for those in private schools."))
Result[4] - Result[3]
cat(paste0("The probability of applying for grad school for those in public schools is ", round(abs(Result[4] - Result[3]) * 100, 2), " percentage points lower than for those in private schools."))
```

```
       OR    OR_new         p     p_new
0.7130527 0.5834480 0.4162468 0.3684668
   OR_new
0.8182397
The odds of applying for grad school for those in public schools are 0.82 times the odds for those in private schools.
      p_new
-0.04778001
The probability of applying for grad school for those in public schools is 4.78 percentage points lower than for those in private schools.
```

Slope of GPA3: $\beta_2 = 0.5482$ with SE = 0.2724, $p$ = .0442

```r
beta_2 <- 0.5482
# Odds and probabilities at GPA = 2.0, 3.0, and 4.0 (GPA3 = -1, 0, 1)
Result_GPA2 <- OR_p(logit_old = beta_0, logit_new = (beta_0 + (-1) * beta_2))
Result_GPA3 <- OR_p(logit_old = beta_0, logit_new = (beta_0 + (0) * beta_2))
Result_GPA4 <- OR_p(logit_old = beta_0, logit_new = (beta_0 + 1 * beta_2))
Result_GPA2
Result_GPA3
Result_GPA4
```

```
       OR    OR_new         p     p_new
0.7130527 0.4121368 0.4162468 0.2918533
       OR    OR_new         p     p_new
0.7130527 0.7130527 0.4162468 0.4162468
       OR    OR_new         p     p_new
0.7130527 1.2336781 0.4162468 0.5523079
```

- For every one-unit increase in GPA, the logit of applying for grad school increases by 0.5482. This corresponds to the odds of applying being multiplied by a factor of $\exp(0.5482) = 1.73$.

- The effect on probability is non-linear. For a student at a private university with no parental graduate degree, the probability of applying increases from 29.19% (GPA=2.0) to 41.62% (GPA=3.0) to 55.23% (GPA=4.0).
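The probabilities quoted in this interpretation can be recomputed from the reported Model 1 coefficients with `plogis()`, the inverse logit in base R. This is a sketch: the helper `pred_prob()` is a hypothetical convenience function, not part of the course code.

```r
# Coefficients copied from the Model 1 output
b <- c(intercept = -0.3382, PARED = 1.0596, GPA3 = 0.5482, PUBLIC = -0.2006)

# Hypothetical helper: predicted probability of applying for a given profile
pred_prob <- function(pared, gpa, public) {
  logit <- b[["intercept"]] + b[["PARED"]] * pared +
    b[["GPA3"]] * (gpa - 3) + b[["PUBLIC"]] * public
  plogis(logit)
}

p_gpa    <- pred_prob(pared = 0, gpa = c(2, 3, 4), public = 0)  # GPA effect
p_pared  <- pred_prob(pared = 1, gpa = 3, public = 0)           # parental degree
p_public <- pred_prob(pared = 0, gpa = 3, public = 1)           # public school

print(round(c(GPA2 = p_gpa[1], GPA3 = p_gpa[2], GPA4 = p_gpa[3],
              PARED1 = p_pared, PUBLIC1 = p_public), 4))
```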

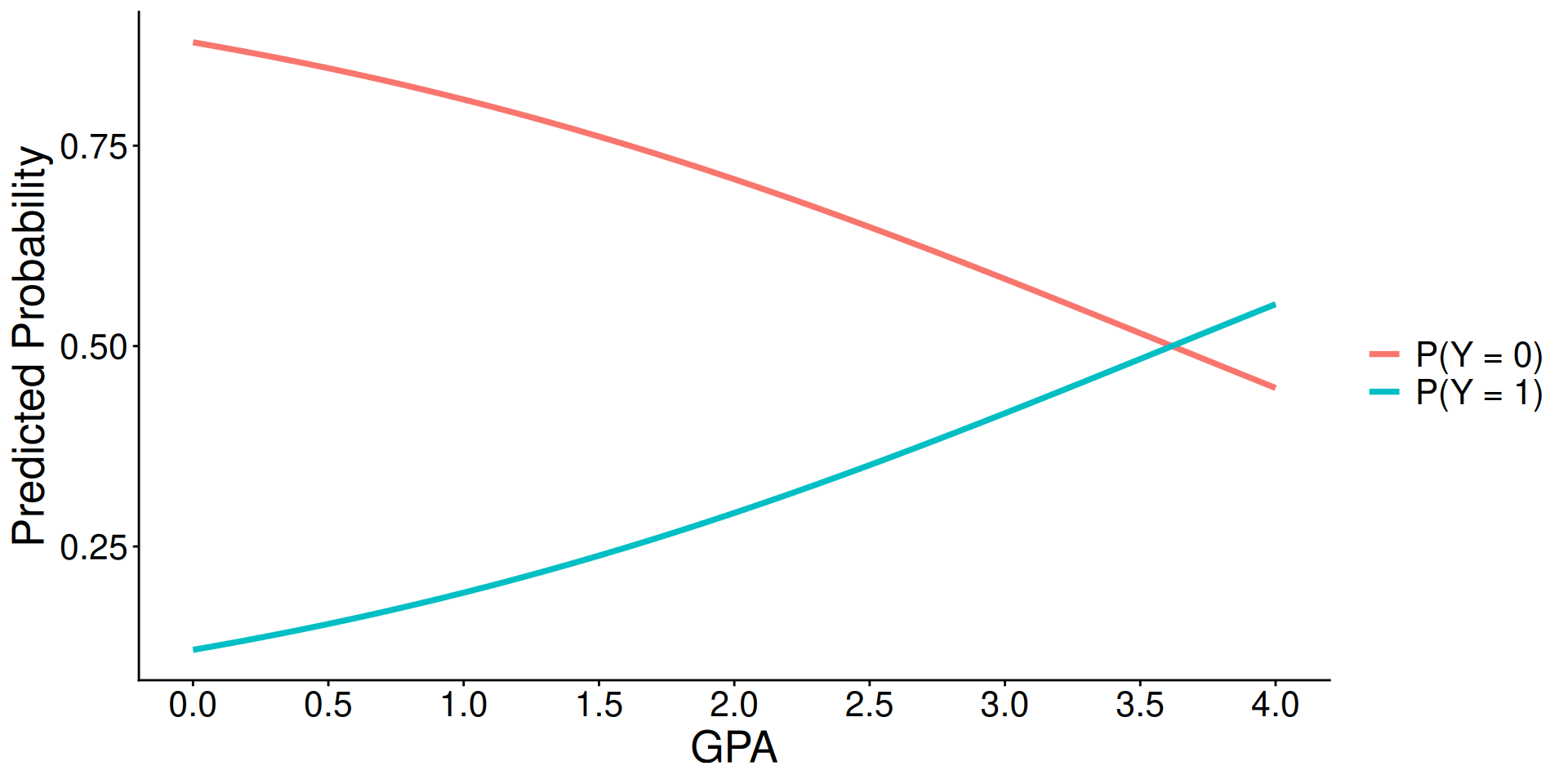

```r
# Predicted probabilities across GPA for PARED = 0 and PUBLIC = 0
new_data <- data.frame(
  GPA3 = seq(-3, 1, .1),
  PARED = 0,
  PUBLIC = 0
)

Pred_prob <- predict(model1, newdata = new_data)$fit

as.data.frame(cbind(GPA = new_data$GPA3 + 3, P_Y_0 = Pred_prob[, 1], P_Y_1 = Pred_prob[, 2])) |>
  pivot_longer(starts_with("P_Y")) |>
  ggplot() +
  aes(x = GPA, y = value) +
  geom_path(aes(group = name, color = name), linewidth = 1.3) +
  labs(y = "Predicted Probability") +
  scale_x_continuous(breaks = seq(0, 4, .5)) +
  scale_color_discrete(labels = c("P(Y = 0)", "P(Y = 1)"), name = "") +
  theme_classic() +
  theme(text = element_text(size = 20))
```

- For logistic models with two responses:

- Regression weights are now for LOGITS

- The direction of what is being modeled has to be understood (Y = 0 or = 1)

- The change in odds and probability is not linear per unit change in the IV, but instead is linear with respect to the logit

- Interactions will still modify the conditional main effects

- Simple main effects are effects when interacting variables = 0

Exercise: Interpreting Logistic Regression

Below is an R code chunk that simulates a dataset about student study hours and exam outcomes. Your task is to analyze this data.

You are given a dataset exam_data with two variables: study_hours and pass_exam (0 = Fail, 1 = Pass).

Your Tasks:

- Fit a logistic regression model to predict `pass_exam` from `study_hours`.

- Interpret the intercept ($\beta_0$). What are the odds and probability of passing the exam for a student who studied for 0 hours?

- Interpret the slope ($\beta_1$). What is the odds ratio associated with a one-hour increase in study time? How does this affect the odds of passing?

Use the R chunk below to write your code.

Here is the solution and interpretation.
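One possible way to work through the exercise is sketched below. The simulation settings (sample size, range of study hours, and the "true" coefficients) are assumptions made for this sketch and need not match the original exercise data.

```r
# Simulate a hypothetical exam_data set
set.seed(123)
n <- 200
exam_data <- data.frame(study_hours = round(runif(n, 0, 10), 1))
true_b0 <- -3   # assumed intercept on the logit scale
true_b1 <- 0.6  # assumed slope on the logit scale
exam_data$pass_exam <- rbinom(n, 1, plogis(true_b0 + true_b1 * exam_data$study_hours))

# Task 1: fit the logistic regression
fit <- glm(pass_exam ~ study_hours, data = exam_data, family = binomial(link = "logit"))
b0 <- unname(coef(fit)[1])
b1 <- unname(coef(fit)[2])

# Task 2: odds and probability of passing at 0 study hours
odds_0 <- exp(b0)
prob_0 <- plogis(b0)

# Task 3: odds ratio for a one-hour increase in study time
or_1 <- exp(b1)

print(round(c(b0 = b0, b1 = b1, odds_0 = odds_0, prob_0 = prob_0, or_1 = or_1), 3))
```

Interpretation follows the same pattern as the grad school example: `odds_0` and `prob_0` describe a student with 0 study hours, and each additional hour multiplies the odds of passing by `or_1`.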

Wrap up

Generalized linear models are models for outcomes with distributions that are not necessarily normal

The estimation process is largely the same: maximum likelihood is still the gold standard as it provides estimates with understandable properties

Learning about each type of distribution and link takes time:

- They all are unique and all have slightly different ways of mapping outcome data onto your model

Logistic regression is one of the more frequently used generalized models – binary outcomes are common
